The Caesar Framework

The Caesar.jl package is an "umbrella" framework around other dedicated algorithmic packages. While most of the packages are implemented in native Julia (JuliaPro), a few dependencies are wrapped C libraries. Note that C/C++ code can be incorporated with zero overhead, as was done with AprilTags.jl.

AMP / IIF / RoME

Robot Motion Estimate (RoME.jl) can operate in the conventional SLAM manner, using local memory (dictionaries), or alternatively distribute over a persisted DistributedFactorGraphs.jl graph through common serialization and graph storage/database technologies; see this article as an example [1.3]. A variety of 2D plotting, 3D visualization, serialization, middleware, and analysis tools come standard, as provided by the associated packages. RoME.jl combines reference frame transformations and robotics SLAM tools around the back-end solver provided by IncrementalInference.jl.
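
To make the "local memory (dictionaries)" versus "persisted through serialization" distinction concrete, here is a minimal sketch in Python. Everything in it is hypothetical and only illustrates the idea: the function names (`new_graph`, `add_factor`, `solve_chain`, ...), the factor labels, and the JSON layout are not the RoME.jl, IncrementalInference.jl, or DistributedFactorGraphs.jl API. The "solver" handles only the trivial case of a 1D odometry chain with a single prior and no loop closures. In that case each marginal is Gaussian, with means and variances simply adding along the chain.

```python
import json

# Hypothetical in-memory factor graph stored in plain dictionaries.
# Illustrative only: this is NOT the DistributedFactorGraphs.jl data model.


def new_graph():
    return {"variables": {}, "factors": {}}


def add_variable(fg, label, kind):
    fg["variables"][label] = {"kind": kind}


def add_factor(fg, label, variables, kind, mean, variance):
    # Each factor records which variables it connects plus a 1D Gaussian
    # (mean, variance) describing the measurement.
    fg["factors"][label] = {
        "variables": list(variables),
        "kind": kind,
        "mean": mean,
        "variance": variance,
    }


def to_json(fg):
    # Serialize the whole graph so it could be written to a file or database.
    return json.dumps(fg, sort_keys=True)


def from_json(text):
    return json.loads(text)


def solve_chain(fg, order):
    """Marginals for a 1D odometry chain with a single prior on order[0].

    With no loop closures, each marginal is the prior plus the summed
    odometry means, with variances adding along the chain.
    """
    factors = list(fg["factors"].values())
    prior = next(f for f in factors
                 if f["kind"] == "prior" and f["variables"] == [order[0]])
    estimates = {order[0]: (prior["mean"], prior["variance"])}
    for prev, curr in zip(order, order[1:]):
        odo = next(f for f in factors
                   if f["kind"] == "odometry" and f["variables"] == [prev, curr])
        mean, var = estimates[prev]
        estimates[curr] = (mean + odo["mean"], var + odo["variance"])
    return estimates


fg = new_graph()
for label in ("x0", "x1", "x2"):
    add_variable(fg, label, "scalar")
add_factor(fg, "x0f1", ["x0"], "prior", 0.0, 0.25)
add_factor(fg, "x0x1f1", ["x0", "x1"], "odometry", 10.0, 0.5)
add_factor(fg, "x1x2f1", ["x1", "x2"], "odometry", 5.0, 0.5)

# Round-trip through serialization, then solve from the restored copy.
restored = from_json(to_json(fg))
print(solve_chain(restored, ["x0", "x1", "x2"]))
# {'x0': (0.0, 0.25), 'x1': (10.0, 0.75), 'x2': (15.0, 1.25)}
```

The point of the round trip is that the solver only needs the graph's contents, not the in-memory object that created them. That separation is what lets a persisted, shared graph stand in for local dictionaries.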

Details about the accompanying packages:

- IncrementalInference.jl supplies the algebraic logic for factor graph inference with the Bayes tree, and itself depends on several packages.

- RoME.jl introduces nodes and factors that are useful to robotic navigation.

- ApproxManifoldProducts.jl provides on-manifold belief product operations.
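
As a rough illustration of what a "belief product" is, the sketch below multiplies two beliefs and renormalizes. It uses only two closed-form special cases. In the Euclidean case, two 1D Gaussians fuse by adding precisions. On the circle, two von Mises densities combine because `kappa * exp(i * mu)` adds like a vector, which handles the wrap-around at ±π. ApproxManifoldProducts.jl itself works with more general, non-parametric beliefs on manifolds. The functions below are hypothetical helpers, not its API.

```python
import math


def gaussian_product(mean_a, var_a, mean_b, var_b):
    """Renormalized product of two 1D Gaussian densities (Euclidean case).

    Precisions (inverse variances) add; the fused mean is the
    precision-weighted average of the two means.
    """
    precision = 1.0 / var_a + 1.0 / var_b
    var = 1.0 / precision
    mean = var * (mean_a / var_a + mean_b / var_b)
    return mean, var


def gaussian_pdf(x, mean, var):
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def von_mises_product(mu_a, kappa_a, mu_b, kappa_b):
    """Renormalized product of two von Mises densities on the circle.

    kappa * exp(i * mu) adds like a 2D vector, so wrap-around at +/- pi
    is handled without any special casing.
    """
    c = kappa_a * math.cos(mu_a) + kappa_b * math.cos(mu_b)
    s = kappa_a * math.sin(mu_a) + kappa_b * math.sin(mu_b)
    return math.atan2(s, c), math.hypot(c, s)


# Euclidean: N(0, 1) * N(2, 1) is proportional to N(1, 0.5).
print(gaussian_product(0.0, 1.0, 2.0, 1.0))  # (1.0, 0.5)

# Circle: two headings just either side of +/- pi.
mu_a, mu_b = math.pi - 0.1, -(math.pi - 0.1)
naive = (mu_a + mu_b) / 2.0  # 0.0, pointing the opposite way
mu, kappa = von_mises_product(mu_a, 4.0, mu_b, 4.0)
print(naive, mu, kappa)  # mu lies at +/- pi, where both beliefs agree
```

The naive average of the two angles lands at 0, on the opposite side of the circle from both beliefs. The on-manifold product correctly lands near ±π. This kind of failure is why belief products need to respect the manifold a variable lives on.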

Visualization (Arena.jl/RoMEPlotting.jl)

Caesar visualization (plotting of results, graphs, and data) is provided by separate 2D and 3D packages:

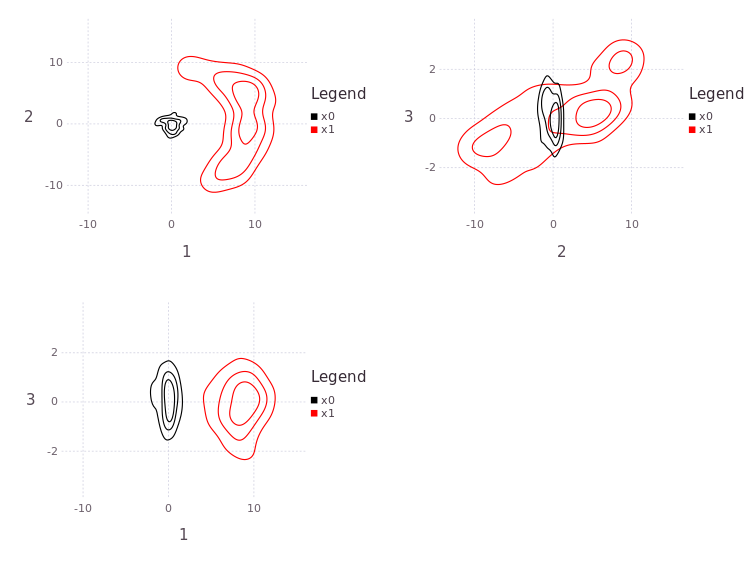

- RoMEPlotting.jl is a set of scripts that provides MATLAB-style plotting of factor graph beliefs, mostly supporting 2D visualization with some support for projections of 3D.

- Arena.jl is a collection of 3D visualization tools.

Multilanguage Interops: NavAbility.io SDKs and APIs

The Caesar framework is not limited to direct Julia use. Check out www.NavAbility.io, or contact info@navability.io directly, for more details. Also see the community multi-language page.
