Technologies Getting Started Database Structure Collaborators Contribute

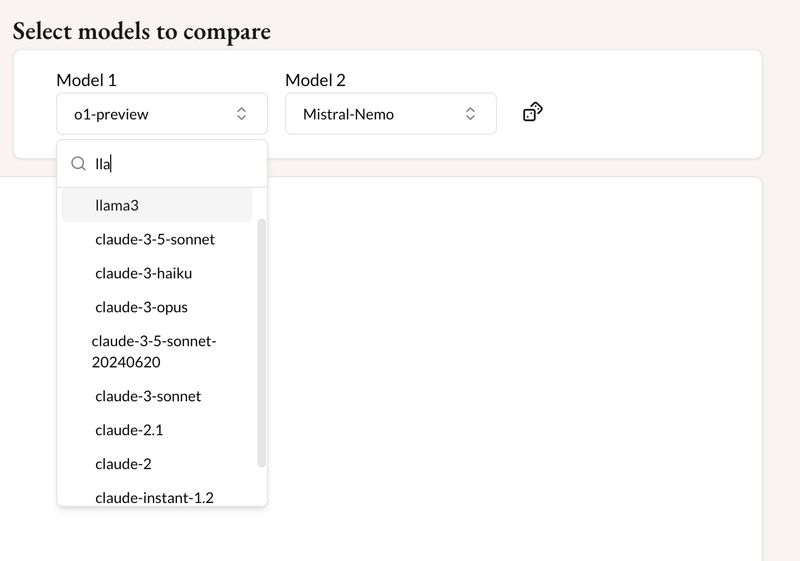

An open-source LLM comparison tool that lets users enter prompts and compare the performance of language models in a blind test format. It supports a wide range of models, from open-source models served through Ollama to hosted ones such as OpenAI's. Select two models, then test them across prompts and scenarios tailored to your domain. Each round presents anonymized responses for evaluation, so you can judge each model's capabilities without knowing which one produced which answer. All collected data will be published to contribute to open-source research.

- React

- TypeScript

- Tailwind CSS

You need the following prerequisites to run the project:

- NodeJS

- Git

- npm

- AWS S3 Buckets

- Supabase

Clone the project:

git clone https://github.com/Supahands/llm-comparison-frontend

Before running the server, duplicate .env.template and fill in your own environment variables, such as the AWS S3 and Supabase credentials.
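A missing or blank environment variable is easy to overlook until a request fails at runtime. The sketch below shows one way to check for that at startup. The variable names are hypothetical placeholders, not the project's real ones; the actual names are whatever .env.template lists.

```typescript
// Hypothetical variable names for illustration only.
// The real names are the ones listed in .env.template.
const REQUIRED_ENV_VARS: readonly string[] = [
  "EXAMPLE_SUPABASE_URL",
  "EXAMPLE_SUPABASE_KEY",
  "EXAMPLE_S3_BUCKET",
];

// Returns the names of required variables that are unset or blank,
// in the same order as the `required` list.
function findMissingEnv(
  env: Record<string, string | undefined>,
  required: readonly string[] = REQUIRED_ENV_VARS,
): string[] {
  return required.filter((name) => {
    const value = env[name];
    return value === undefined || value.trim() === "";
  });
}
```

In a Node.js context you could call `findMissingEnv(process.env)` before starting the server and stop with a clear message if the returned list is not empty.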

Then, run the development server:

npm run dev

# or

yarn dev

# or

pnpm dev

# or

bun dev

Open http://localhost:3000 with your browser to see the result.

You can start editing the page by modifying app/page.tsx. The page auto-updates as you edit the file.

This project uses next/font to automatically optimize and load Geist, a new font family for Vercel.

The application uses Supabase with the following tables:

Stores the configuration for available LLM models:

- id: Unique identifier

- provider: The AI provider (e.g., OpenAI, Anthropic)

- model_name: Name of the model (e.g., GPT-4, Claude)

- disabled: Boolean flag to enable/disable models
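As a rough illustration of how these columns might be used on the client, the sketch below types one row and filters out disabled models. The column names come from the list above; the column types (for example, `id` as a string) and the helper name `enabledModelsByProvider` are assumptions, not taken from the project's code.

```typescript
// Row shape inferred from the column list above.
// The exact column types are assumptions.
interface ModelRow {
  id: string;
  provider: string;
  model_name: string;
  disabled: boolean;
}

// Groups the names of all enabled models by provider.
// Rows with `disabled: true` are skipped.
function enabledModelsByProvider(rows: ModelRow[]): Record<string, string[]> {
  const grouped: Record<string, string[]> = {};
  for (const row of rows) {
    if (row.disabled) continue;
    const names = grouped[row.provider] || [];
    names.push(row.model_name);
    grouped[row.provider] = names;
  }
  return grouped;
}
```

A model selector could call this on the fetched rows so that disabled models never appear as choices.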

Collects statistics and responses from model comparisons:

- Stores user choices and model performance metrics

- Used for analyzing model comparison results
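To make the analysis step concrete, here is a minimal sketch of tallying wins from blind comparison rounds. The `ComparisonRound` shape and its field names are hypothetical; this document does not list the table's actual columns, so treat the code as an illustration of the idea and not as the project's schema.

```typescript
// Hypothetical record of one blind round.
// The real table's columns are not documented here.
interface ComparisonRound {
  modelA: string;
  modelB: string;
  choice: "A" | "B" | "tie";
}

// Counts how many rounds each model won.
// Every model that took part appears in the result, even with zero wins.
// A tie adds no win to either side.
function countWins(rounds: ComparisonRound[]): Record<string, number> {
  const wins: Record<string, number> = {};
  for (const round of rounds) {
    const winsA = wins[round.modelA] || 0;
    wins[round.modelA] = winsA + (round.choice === "A" ? 1 : 0);
    const winsB = wins[round.modelB] || 0;
    wins[round.modelB] = winsB + (round.choice === "B" ? 1 : 0);
  }
  return wins;
}
```

Because users only see anonymized "A" and "B" responses, the mapping back to model names happens after the choice is recorded, which is why each round carries both model names alongside the choice.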

Currently, we do not have Anthropic models (Claude) supported on the official site due to API costs. We are actively seeking sponsors to help integrate these models. If you have suggestions for including Anthropic models or would like to sponsor API access, please open an issue on our GitHub repository!

Want to help? Here's what you can do:

- Open an issue with suggestions for Anthropic model integration

- Reach out about sponsorship opportunities

- Share alternative solutions for accessing Anthropic's API

Special thanks to everyone who contributed to this project.

- Hank

- Wei Ming Thor

- Evanz

- Noah Rijkaard

To contribute, clone the repository, create a feature branch, follow the commit patterns, and open a pull request:

git clone https://github.com/Supahands/llm-comparison-frontend

git checkout -b feature/NAME

- Follow commit patterns

- Open a Pull Request explaining the problem solved or the feature added. If the change is visual, attach screenshots of the modifications, then wait for the review!