Tool for automated world-based analysis of wearable eye tracker data. gazeMapper is an open-source tool for automated mapping and processing of eye-tracking data to enable automated world-based analysis. gazeMapper can:

- Transform head-centered data to one or multiple planar surfaces in the world.

- Synchronize recordings from multiple participants and external cameras.

- Determine data quality measures, e.g., accuracy and precision using glassesValidator (Niehorster et al., 2023).

If you use this tool or any of the code in this repository, please cite:

Niehorster, D.C., Hessels, R.S., Nyström, M., Benjamins, J.S. and Hooge, I.T.C. (submitted). gazeMapper: A tool for automated world-based analysis of gaze data from one or multiple wearable eye trackers. Manuscript submitted for publication, 2024 (BibTeX)

If you use the functionality for automatically determining the data quality (accuracy and precision) of wearable eye tracker recordings, please additionally cite:

Niehorster, D.C., Hessels, R.S., Benjamins, J.S., Nyström, M. and Hooge, I.T.C. (2023). GlassesValidator: A data quality tool for eye tracking glasses. Behavior Research Methods. doi: 10.3758/s13428-023-02105-5 (BibTeX)

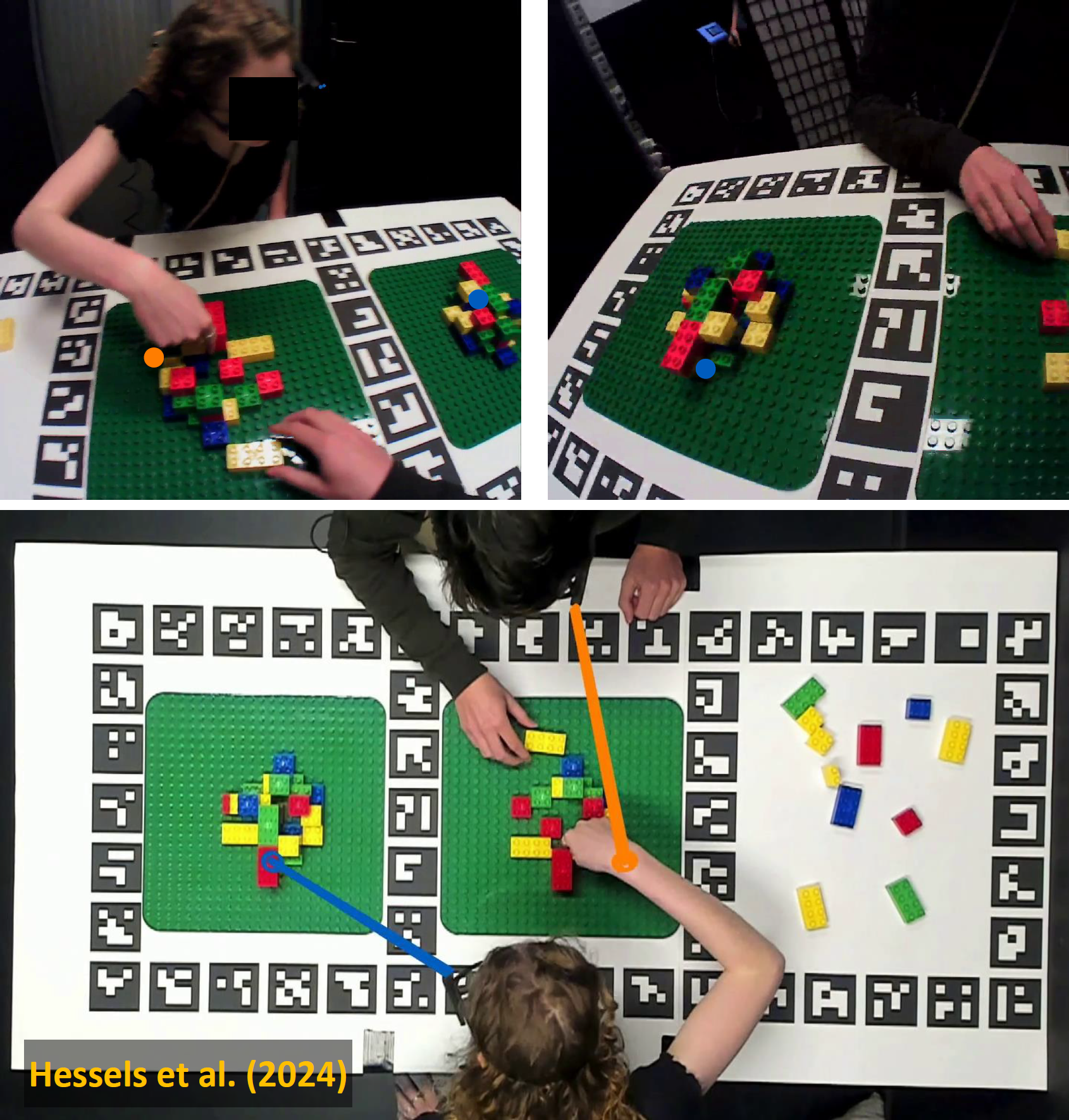

Example where gazeMapper has been used to map head-centered gaze from two head-worn eye tracker recordings to synchronized world-centered gaze data of the pair, drawn on an overview video recording with an additional external camera. From Hessels, R.S., Iwabuchi, T., Niehorster, D.C., Funawatari, R., Benjamins, J.S., Kawakami, S., Nyström, M., Suda, M., Hooge, I.T.C., Sumiya, M., Heijnen, J., Teunisse, M. & Senju, A. (submitted). Gaze behavior in face-to-face interaction: A cross-cultural investigation between Japan and the Netherlands. Manuscript submitted for publication, 2024

gazeMapper is available from https://github.com/dcnieho/gazeMapper, and supports Python 3.10 and 3.11 on Windows, MacOS and Linux (newer versions of Python should work fine but are not tested).
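Before installing, it can help to check which of these categories your interpreter falls into. The following is a minimal sketch using only the Python standard library; the function name `python_support_status` and the three labels it returns are hypothetical and not part of gazeMapper.

```python
import sys

# Versions the text above lists as supported (tested)
TESTED_VERSIONS = {(3, 10), (3, 11)}
MINIMUM_VERSION = (3, 10)


def python_support_status(version_info=sys.version_info) -> str:
    """Classify a Python version as 'tested', 'untested' or 'unsupported'."""
    major_minor = (version_info[0], version_info[1])
    if major_minor in TESTED_VERSIONS:
        return "tested"
    if major_minor > MINIMUM_VERSION:
        # Newer than the tested versions: expected to work, but not tested
        return "untested"
    return "unsupported"


print(f"Python {sys.version_info[0]}.{sys.version_info[1]}: {python_support_status()}")
```

An "untested" result is not an error. It only signals that you are on a Python version newer than the ones listed above.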

For Windows users who wish to use the gazeMapper GUI, the easiest way to acquire gazeMapper is to download a standalone executable. The standalone executable is not available for MacOS or Linux. Complete installation instructions for MacOS and Linux users are available below.

For users on Windows, Mac or Linux who wish to use gazeMapper in their Python code, the easiest way to acquire gazeMapper is to install it directly into your Python distribution using the command `python -m pip install gazeMapper`. If you run into problems installing the `imgui_bundle` package on MacOS, you can try to install it first with the command `SYSTEM_VERSION_COMPAT=0 pip install --only-binary=:all: imgui_bundle`. Note that this repository is pip-installable as well: `python -m pip install git+https://github.com/dcnieho/gazeMapper.git#egg=gazeMapper`. NB: on some platforms you may have to replace `python` with `python3` in the above command lines.

Once pip-installed in your Python distribution, there are three ways to run the GUI on any of the supported operating systems:

- Directly in the terminal of your operating system, type `gazeMapper` and run it (NB: this does not appear to work in Anaconda environments, use one of the below methods for those).

- Open a Python console. From such a console, running the GUI requires only the following two lines of code: `import gazeMapper` followed by `gazeMapper.GUI.run()`.

- If you run gazeMapper's GUI from a script, make sure to wrap your script in `if __name__=="__main__":`. This is required for correct operation from a script because the GUI uses multiprocessing functionality. Do as follows: start the script with the line `if __name__=="__main__":` and place `import gazeMapper` and `gazeMapper.GUI.run()` indented beneath it.
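The script variant in the last item can be sketched as a small launcher. This is a minimal sketch, not part of gazeMapper: the helper name `run_gui_if_installed` is hypothetical, and the only gazeMapper call it uses is `gazeMapper.GUI.run()` as given above. It first checks whether the package can be found, so that a missing installation produces a readable message instead of a traceback.

```python
import importlib.util


def run_gui_if_installed() -> bool:
    """Launch the gazeMapper GUI if the package is installed.

    Returns False (without launching anything) when gazeMapper cannot be found.
    """
    if importlib.util.find_spec("gazeMapper") is None:
        print("gazeMapper is not installed; try: python -m pip install gazeMapper")
        return False
    import gazeMapper  # imported lazily, only once we know it is available

    gazeMapper.GUI.run()
    return True


# The guard keeps processes spawned by multiprocessing from re-running the launcher
if __name__ == "__main__":
    run_gui_if_installed()
```

Keeping everything that starts the GUI under the guard is the important part. Helper functions defined at module level, as here, are safe to import from child processes.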

Installing and running gazeMapper on a Mac will involve some use of the terminal. In this section we will show you step by step how to install, and then what to do every time you want to run gazeMapper.

Note that it is critical on MacOS that gazeMapper is installed natively, and not under Rosetta or Parallels. A non-native installation will lead to an error when importing the polars package, and to other unfixable errors.
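If you are unsure whether your Python runs natively, the following sketch may help. It assumes the macOS `sysctl` key `sysctl.proc_translated`, which reports `1` for a process translated by Rosetta 2 and is absent on Intel Macs. The function name `running_under_rosetta` is hypothetical, and the check does not detect Parallels.

```python
import platform
import subprocess
import sys


def running_under_rosetta() -> bool:
    """Return True if this Python process is being translated by Rosetta 2."""
    if sys.platform != "darwin":
        return False  # Rosetta only exists on macOS
    try:
        result = subprocess.run(
            ["sysctl", "-n", "sysctl.proc_translated"],
            capture_output=True, text=True, check=False,
        )
    except OSError:
        return False  # sysctl could not be started
    # A non-zero exit code means the key does not exist (e.g. on Intel Macs)
    return result.returncode == 0 and result.stdout.strip() == "1"


if sys.platform == "darwin":
    print("architecture reported to Python:", platform.machine())
    print("running under Rosetta:", running_under_rosetta())
```

On an Apple Silicon Mac, a native interpreter reports the architecture `arm64`, while one running under Rosetta reports `x86_64`.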

- To acquire and manage Python, install Anaconda by following these instructions. Additional notes:
  a. Choose the graphical installer.
  b. When clicking the installer link provided in these instructions, you may first be shown a user registration page. You can click "skip registration" there to directly go to the download files.
  c. Ensure that you choose the correct installer for your system (Intel or Apple Silicon). If you are not sure what kind of system you have, consult this page to learn how to find out.

- Once Anaconda is installed, open the Terminal application.

- You now first need to make an environment with the correct Python version in which you can then install gazeMapper. To do so, type `conda create -n gazeMapper-env python=3.11 pip` and run the command. `gazeMapper-env` is the name of the environment you create with this command.

- Activate the environment you have just created: `conda activate gazeMapper-env`.

- Now you need to install gazeMapper. Do the following in the below order:
  a. Type and run `SYSTEM_VERSION_COMPAT=0 pip install --only-binary=:all: imgui_bundle==1.5.2`
  b. Type and run `pip install gazeMapper`.

If you already have gazeMapper installed but want to update it to the latest version, do the following:

- Open the Terminal application.

- Activate the environment in which you have gazeMapper installed: type and run `conda activate gazeMapper-env`, where `gazeMapper-env` is the name of the environment you created using the above instructions. If you use an environment with a different name, replace the name in the command.

- Type and run `SYSTEM_VERSION_COMPAT=0 pip install --only-binary=:all: imgui_bundle==1.5.2`

- Type and run `pip install gazeMapper --upgrade`.

If you have followed the above instructions to install gazeMapper, do the following each time you want to run gazeMapper:

- Open the Terminal application.

- Activate the environment in which you have gazeMapper installed: type and run `conda activate gazeMapper-env`, where `gazeMapper-env` is the name of the environment you created using the above instructions. If you use an environment with a different name, replace the name in the command.

- Start the Python interpreter: type and run `python`.

- Type and run `import gazeMapper`.

- Type and run `gazeMapper.GUI.run()`.

Installing and running gazeMapper on Linux will involve some use of the terminal. In this section we will show you step by step how to install, and then what to do every time you want to run gazeMapper.

- You may well already have Python installed on your machine. To check, type and run `python3 --version` in a terminal. If this command completes successfully and shows you have Python 3.10 or later, you can skip to step 3.

- To install Python, check what is the appropriate command for your Linux distribution. Examples would be `sudo apt-get update && sudo apt-get install python3.11` for Ubuntu and its derivatives, and `sudo dnf install python3.11` for Fedora. You can replace `python3.11` in this command with a different version (minimum 3.10).

- Make a new folder from where you want to run gazeMapper, e.g. `mkdir gazeMapper`. Enter this folder: `cd gazeMapper`.

- You now first need to make an environment in which you can then install gazeMapper. To do so, type `python3 -m venv .venv` and run the command.

- Activate the environment you have just created: `source .venv/bin/activate`.

- Now you need to install gazeMapper. To do so, type and run `pip install gazeMapper`.

If you already have gazeMapper installed but want to update it to the latest version, do the following:

- Open the Terminal application.

- Navigate to the gazeMapper install location and activate the environment in which you have gazeMapper installed: type and run `source .venv/bin/activate` in the location where you installed gazeMapper.

- Type and run `pip install gazeMapper --upgrade`.

If you have followed the above instructions to install gazeMapper, do the following each time you want to run gazeMapper:

- Open the Terminal application.

- Navigate to the gazeMapper install location and activate the environment in which you have gazeMapper installed: type and run `source .venv/bin/activate` in the location where you installed gazeMapper.

- Type and run `gazeMapper`.

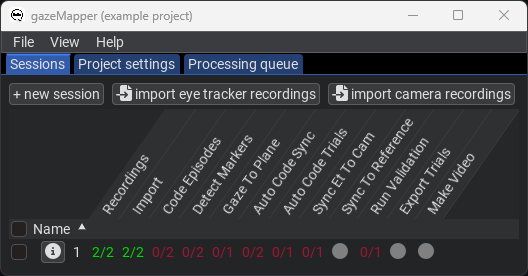

To use gazeMapper, first a recording session needs to be defined for the project. This entails telling gazeMapper which eye tracker and external camera recording(s) to expect, and what planes to map gaze to. The very simplest setup consists of a single eye tracker recording per session and a single plane to map gaze to, but multiple planes and any number of eye tracker and external camera recordings per session are supported. Sessions consisting of only a single external camera recording are not supported; at least one eye tracker recording should be included. Once this setup has been performed, new sessions can be created and recordings imported to the defined eye tracker and external camera recording slots of these new sessions. Once imported, further processing can commence.
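To make the idea of a session definition concrete, here is a minimal sketch of it as a data structure. It is purely illustrative and is not gazeMapper's API: the class names, the `Camera` label and the `problems` method are all hypothetical. It only encodes the rule stated above that every session needs at least one eye tracker recording.

```python
from dataclasses import dataclass, field
from enum import Enum


class RecordingType(Enum):
    EYE_TRACKER = "Eye Tracker"
    CAMERA = "Camera"  # hypothetical label for an external camera recording


@dataclass
class SessionDefinition:
    # Maps a recording slot name (free to choose) to its type
    recordings: dict[str, RecordingType] = field(default_factory=dict)
    # Names of the planes that gaze will be mapped to
    planes: list[str] = field(default_factory=list)

    def problems(self) -> list[str]:
        """Return a list of reasons why this definition cannot be used."""
        issues = []
        has_eye_tracker = any(
            kind is RecordingType.EYE_TRACKER for kind in self.recordings.values()
        )
        if not has_eye_tracker:
            issues.append("at least one eye tracker recording is required")
        return issues


# Simplest setup: one eye tracker recording and one plane
simplest = SessionDefinition({"eye tracker": RecordingType.EYE_TRACKER}, ["screen"])
# Not supported: only an external camera
camera_only = SessionDefinition({"overview": RecordingType.CAMERA}, ["screen"])

print(simplest.problems())
print(camera_only.problems())
```

Every new session created in a project then provides one slot per entry in `recordings`, into which an actual recording is imported.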

The gazeMapper package includes a graphical user interface (GUI) that can be used to perform all configuration and processing. Below we describe three example workflows using the GUI. While most of gazeMapper's functionality will be used in these examples, for a full description of all configuration options the reader is referred to the configuration section of this readme.

Besides using the GUI, advanced users can instead opt to call all of gazeMapper's functionality directly from their own Python scripts without making use of the GUI. The interested reader is referred to the API section below for further details regarding how to use the gazeMapper functionality directly from their own scripts.

Here we first present example workflows using the GUI. More detailed information about gazeMapper configuration is provided below. We strongly recommend that new users first work through these examples to see how gazeMapper works before starting on their own projects.

Examples:

- Example 1 is a minimum example, using a single eye tracker recording per session and a single plane to map gaze to, along with a glassesValidator setup for determining data quality.

- Example 2 is a more elaborate version of example 1, using two eye tracker recordings per session that have to be synchronized and two planes to map gaze to (again along with a glassesValidator setup for determining data quality).

- Example 3 is a recording where a participant looks at and interacts with multiple planes, including a moving plane, and is furthermore observed with an external overview camera. As with the other examples, a glassesValidator setup is used for determining data quality.

The data recordings required for running these examples are available here. The gaze-on-plane data, validation output and videos resulting from running these examples are available here.

Example data, stimulus presentation code and the configuration and coding files needed for exactly recreating the below steps are provided.

Example 1 is a minimum example, showing a short recording where a participant looks at a single plane on which three images are shown in sequence. At the start of the recording, the participant is furthermore asked to look at a glassesValidator poster (Niehorster et al., 2023), to make it possible to determine data quality measures for the recording. Finally, the glassesValidator poster is also used for synchronizing the eye tracker's gaze data with its scene camera. We strongly recommend collecting data for both synchronization and data quality determination for all wearable eye tracker recordings. The preparation, data recording, and data analysis for this example were performed as follows:

- The researcher prepares the recording space. This involves

  - Preparing a glassesValidator poster (see steps 1 and 2 here), either printed so it can be hung in a convenient location (best such that it is not in view of the eye tracker's scene camera during the rest of the experiment), or shown on a projector or another computer screen.

  - A plane to which the gaze data will be projected needs to be delineated using ArUco markers. While in this example we achieve that by presenting ArUco markers on a computer monitor together with the stimulus images, it could also be achieved by attaching ArUco markers to the edge of the screen. More generally, any planar surface can be used by placing a sufficient number of ArUco markers on it. While there are no hard requirements on the number of ArUco markers that need to be visible in a scene camera video frame for the mapping of gaze to the plane to succeed, it is recommended to aim for at the very minimum a handful of markers that cover a reasonable space to achieve a high probability that the plane is detected correctly. We recommend that users pilot test extensively, under the lighting conditions of the experiment and using participant behavior expected during the experiment (given that both lower light and faster participant movements may decrease the likelihood that markers are detected successfully).

  It is important that the exact positions, sizes and orientations of the individual ArUco markers are known. This is required for gazeMapper to be able to correctly determine the observer's position and orientation with respect to the plane, allowing for gaze to be projected to the plane. This information has to be provided to gazeMapper. For more information, see gazeMapper planes.
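To illustrate why position, size and orientation together fully describe a marker, the sketch below computes the plane coordinates of a marker's four corners from those three quantities. It is a hypothetical helper and not gazeMapper code. It assumes positions refer to the marker center, that x grows rightward and y grows downward, and that rotation is about the marker center. The format of gazeMapper's own plane definition files is described in the gazeMapper planes section and is not reproduced here.

```python
import math


def marker_corners(center_x, center_y, side, rotation_deg=0.0):
    """Return the four corners of a square marker, in plane coordinates.

    For an unrotated marker the order is top-left, top-right, bottom-right,
    bottom-left (with y growing downward). Units are whatever unit the plane
    is defined in, e.g. mm.
    """
    half = side / 2.0
    angle = math.radians(rotation_deg)
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    corners = []
    for dx, dy in [(-half, -half), (half, -half), (half, half), (-half, half)]:
        # Rotate the corner offset about the center, then translate
        corners.append((center_x + dx * cos_a - dy * sin_a,
                        center_y + dx * sin_a + dy * cos_a))
    return corners


# A marker with a 41.25 mm side centered at (100, 50) mm on the plane
for corner in marker_corners(100.0, 50.0, 41.25):
    print(corner)
```

If any of the three quantities is off, all four corners shift, and with them the estimated pose of the plane relative to the scene camera.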

- A recording then proceeds as follows.

  - First the participant is positioned in front of the glassesValidator poster and completes a glassesValidator data collection (see step 3 here). Note that in the example, the validation is not performed at arm's length, but at the distance at which the rest of the task will be completed. If a single distance is predominant during an experiment, performing the validation data collection at that distance is recommended.

  - After this, while the participant remains at the same position, they are instructed to continuously fixate the fixation target at the center of the validation poster while slowly nodding no with their head five times, and then slowly nodding yes five times.

  - Next, the main task commences. In the example, the participant simply views a picture presented on the screen, and presses the spacebar when they are done viewing the picture. Each picture presentation is preceded and succeeded by a sequence of single ArUco markers that allows the trials in the recording to be delineated automatically.

- To start processing a recording with gazeMapper, a gazeMapper project first needs to be created and configured. If you want to skip the configuration and just quickly test out gazeMapper, you can create a new gazeMapper project following the first step below, and then simply replace the content of this project's config folder with the configuration provided here. Reopen the project in gazeMapper after replacing the configuration and skip to step 4.

-

Run gazeMapper and make a new gazeMapper project.

-

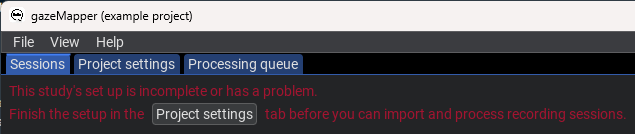

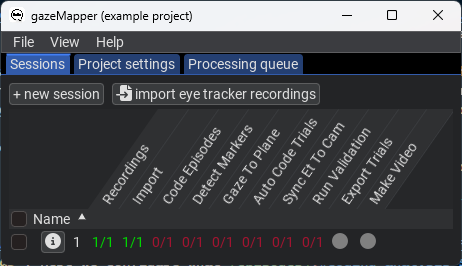

You will be greeted by the following screen, go to the

Project settingspane. -

First we have to tell gazeMapper what recordings to expect for a session, click on

Edit session definitionto do so. -

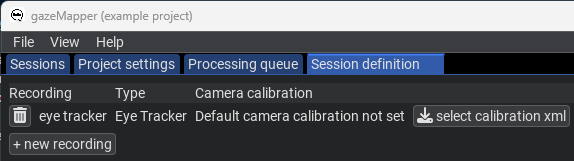

Click

+ new recordingto define a recording. Call it anything you wish, for instanceeye tracker, and selectEye Trackeras recording type. The screen will now look as follows. -

Back on the

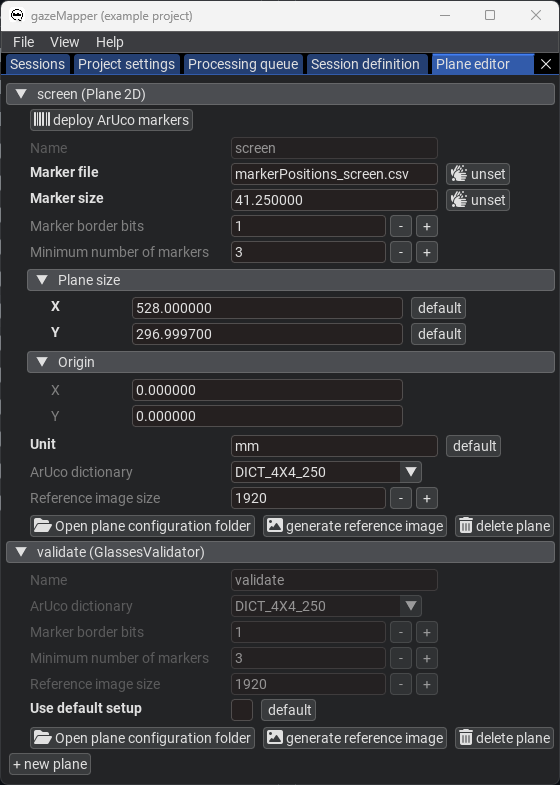

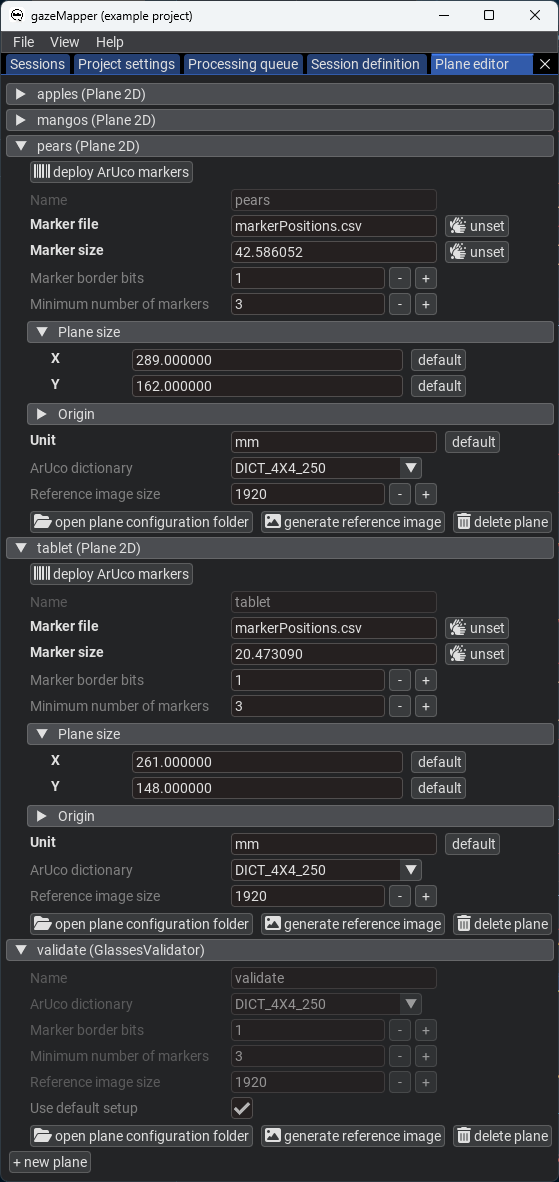

Project settingspane, click onEdit planes. Here we need to add both the glassesValidator poster and the stimulus screen as planes.- Click on

+ new planeand call itvalidate. SelectGlassesValidatoras plane type. - Since the example did not use the default glassesValidator poster printed on A2 format but instead presented the glassesValidator poster on screen, a custom glassesValidator setup is needed. To set this up, uncheck the

Use default setupbutton for the validate plane. Press theOpen plane configuration folderbutton to open the plane's configuration folder. The files there will have to be edited outside of gazeMapper to reflect the glassesValidator poster as presented on the computer screen. For this example, use the files available here, which were created based on calculations in the Excel sheet provided here. Besides custommarkerPositions.csvandtargetPositions.csvfiles to correctly specify the location of validation targets and ArUco markers on the screen, also thevalidationSetup.txtfile was edited to set the correct size for the ArUco markers (markerSidevariable), the validation targets' diameter (targetDiameter) and the size of the plane (gridColsandgridRows, which were set to the size of the monitor). Note that for glassesValidator, these sizes and positions are specified in cm, not mm. - Click on

+ new planeagain and call itscreen. SelectPlane 2Das plane type. - Place the

markerPositions_screen.csvfile in the screen plane's setup folder (again use theOpen plane configuration folderbutton if needed). In the GUI, provide the name of this file for theMarker fileparameter. This file was also created using the calculations in the Excel sheet provided here. For more information about such plane definition files, see below. - Further configure the plane: set the

Marker sizeto41.25, theUnittomm, and the plane size toX:528andY:296.9997. See the below image for the final plane configuration.

- Click on

-

Back on the

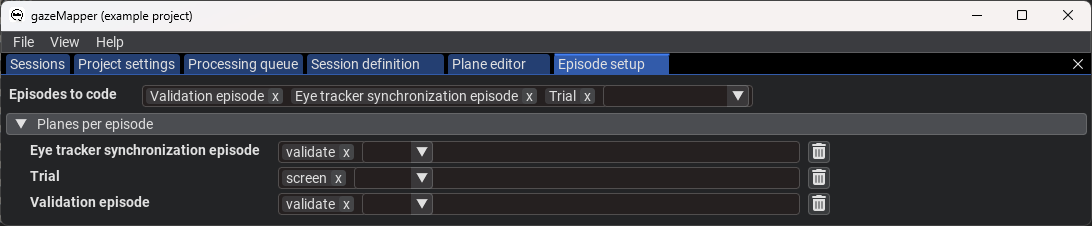

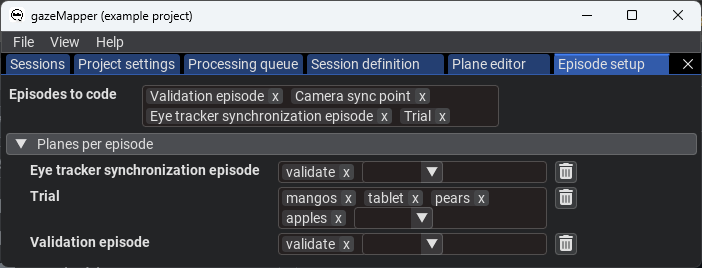

Project settingspane, click onEpisode setup. Here we configure what episodes can be coded, and what plane(s) are associated with each episode.- For the

Episodes to code, add theValidation episode,Eye tracker synchronization episodeandTrial. - Under

Planes per episode, add all three above items. Set thevalidateplane for theValidation episodeandEye tracker synchronization episode, and thescreenplane for theTrialepisode. This indicates that for episode in the recording coded as validation or synchronization episodes only thevalidateplane will be searched for and processed, while for trials thescreenplane will be used.
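The effect of the planes-per-episode setting can be pictured as a lookup from coded episodes to planes. The sketch below is illustrative only and does not reflect how gazeMapper stores this configuration: the episode and plane names are those of this example, while the function `planes_for_frame`, the interval representation and the frame numbers are hypothetical.

```python
# Planes associated with each episode type, as configured in this example
EPISODE_PLANES = {
    "Validation episode": ["validate"],
    "Eye tracker synchronization episode": ["validate"],
    "Trial": ["screen"],
}


def planes_for_frame(frame, coded_episodes):
    """Return the planes to search for in a given scene video frame.

    coded_episodes maps an episode name to a list of (start_frame, end_frame)
    intervals, both inclusive.
    """
    planes = []
    for episode, intervals in coded_episodes.items():
        if any(start <= frame <= end for start, end in intervals):
            for plane in EPISODE_PLANES[episode]:
                if plane not in planes:
                    planes.append(plane)
    return planes


# Hypothetical coding of one recording
coding = {
    "Validation episode": [(100, 400)],
    "Eye tracker synchronization episode": [(450, 900)],
    "Trial": [(1000, 1500), (1600, 2100)],
}

print(planes_for_frame(200, coding))   # inside the validation episode
print(planes_for_frame(1200, coding))  # inside the first trial
print(planes_for_frame(950, coding))   # between episodes
```

Frames that fall outside all coded episodes yield no planes, so no gaze is mapped for them.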


-

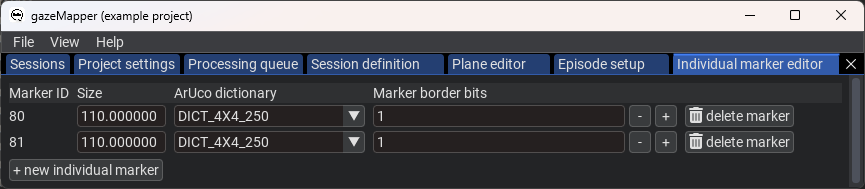

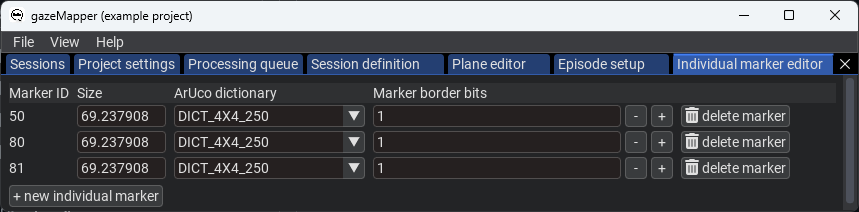

Next, detection of the markers to delineate trials needs to be configured. On the

Project settingspane, click onEdit individual markersto configure these. -

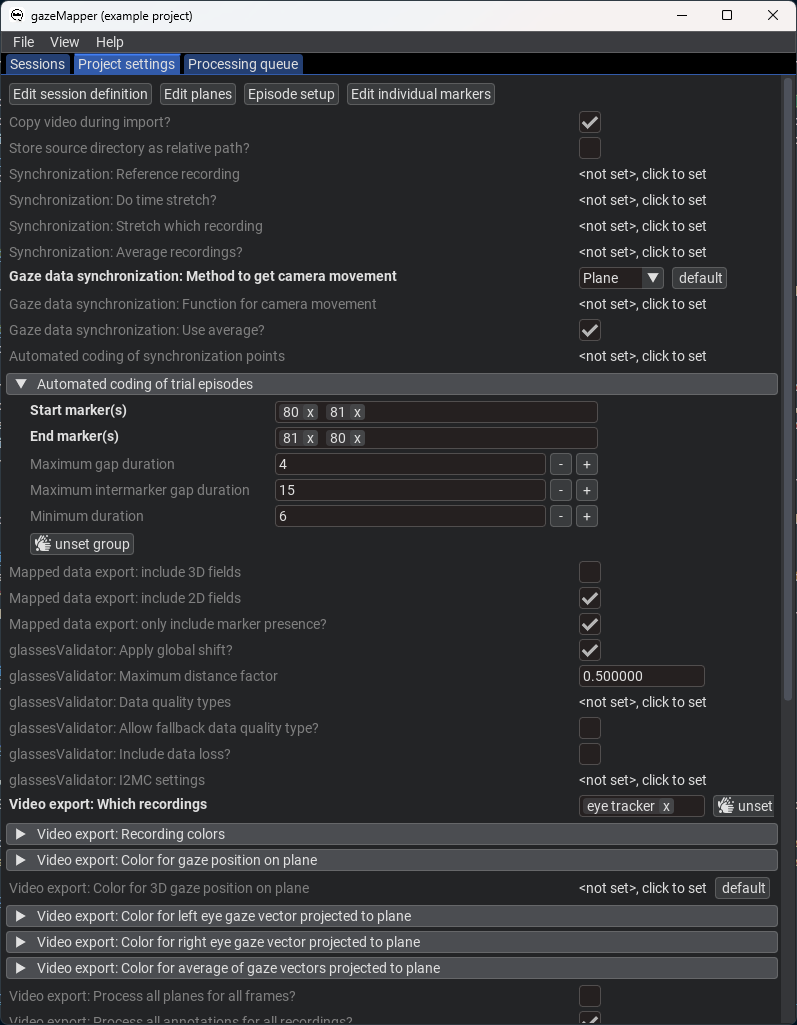

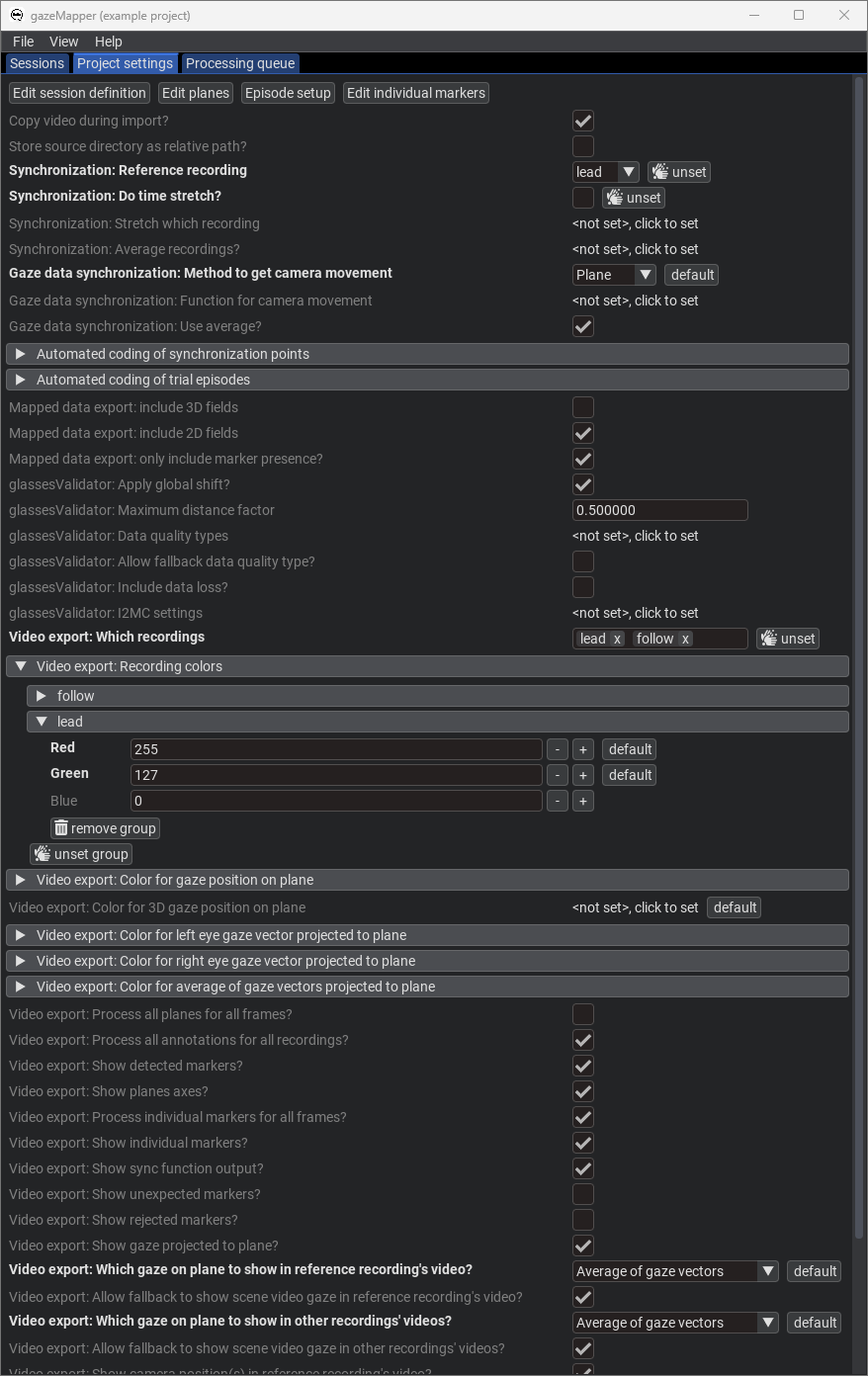

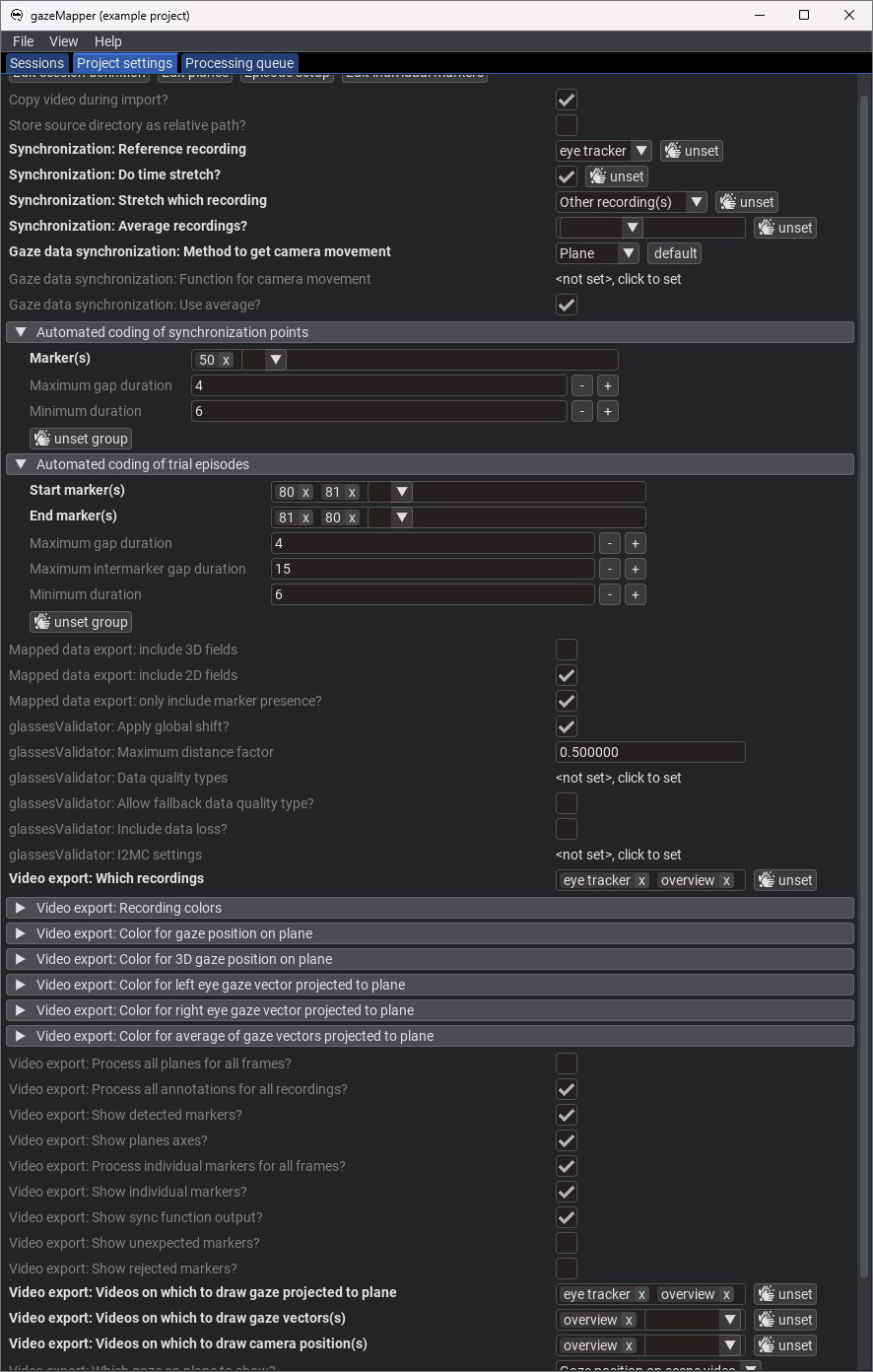

Some settings also need to be configured on the

Project settingspage itself. Specifically, we need to set:Gaze data synchronization: Method to get camera movementtoPlaneas we'll use the glassesValidator plane for synchronizing gaze data and the scene camera.- Set up

Automated coding of trial episodesby clicking onclick to set. Expand the created settings. SetStart marker(s)to80 81andEnd marker(s)to81 80, since these are the markers used in the example in that order to denote trial starts and ends. For the rest of the settings the defaults are ok. Note that also different markers or marker sequences can be used for starts and ends. - When processing the recording, we want to output a scene video with detected markers and gaze projected to the validation and screen planes. To set this up, set

Video export: which recordingstoeye tracker, the name of the recording we defined in the session definition. - Furthermore, set up a color with which to draw gaze in the

Video export: recording colorsfield, using the colorRed:255,Green:127andBlue:0. - Set

Video export: Videos on which to draw gaze projected to planetoeye tracker. - Finally, open

Video export: Color for 3D gaze position on the planeand clickUnset groupto unset that color, which means that that gaze type will not be drawn on the video.
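The start and end marker sequences configured above (`80 81` and `81 80`) can be turned into trial intervals along the following lines. This is a simplified sketch of the idea and not gazeMapper's implementation. It assumes marker detections have already been grouped into runs of consecutive frames, that the markers of a sequence follow each other within `max_gap` frames, and that a trial runs from the frame after the start sequence to the frame before the end sequence. All names and frame numbers are hypothetical.

```python
from typing import NamedTuple


class MarkerRun(NamedTuple):
    marker_id: int
    first_frame: int
    last_frame: int


def find_sequences(runs, sequence, max_gap=15):
    """Return (first_frame, last_frame) for each occurrence of `sequence`."""
    hits = []
    n = len(sequence)
    for i in range(len(runs) - n + 1):
        window = runs[i:i + n]
        ids_match = [run.marker_id for run in window] == list(sequence)
        gaps_ok = all(b.first_frame - a.last_frame <= max_gap
                      for a, b in zip(window, window[1:]))
        if ids_match and gaps_ok:
            hits.append((window[0].first_frame, window[-1].last_frame))
    return hits


def code_trials(runs, start_seq=(80, 81), end_seq=(81, 80), max_gap=15):
    """Pair each start sequence with the first end sequence that follows it."""
    starts = [last + 1 for _, last in find_sequences(runs, start_seq, max_gap)]
    ends = [first - 1 for first, _ in find_sequences(runs, end_seq, max_gap)]
    trials = []
    for start in starts:
        later_ends = [end for end in ends if end >= start]
        if later_ends:
            trials.append((start, min(later_ends)))
    return trials


# Two trials, each framed by 80 81 ... 81 80
runs = [
    MarkerRun(80, 10, 19), MarkerRun(81, 20, 29),
    MarkerRun(81, 130, 139), MarkerRun(80, 140, 149),
    MarkerRun(80, 200, 209), MarkerRun(81, 210, 219),
    MarkerRun(81, 320, 329), MarkerRun(80, 330, 339),
]
print(code_trials(runs))
```

Using mirrored sequences for starts and ends, as in this example, means a trial boundary cannot be confused with its counterpart even though both use the same two markers.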

- Now that the project is set up, we are ready to import and process recordings.

-

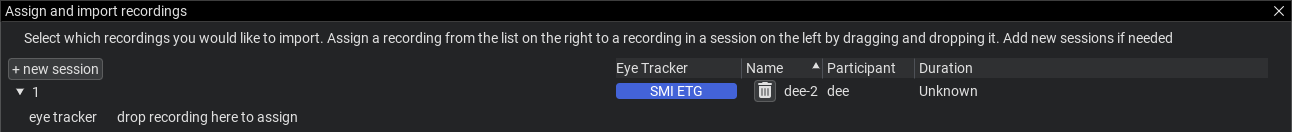

On the

Sessionpane, clickimport eye tracker recordings. There, select the folder containing the example data and indicate its anSMI ETGrecording. Note that you could also trigger import by drag-dropping a data folder onto gazeMapper. Note also that an SMI ETG recording has to be prepared before it can be imported by gazeMapper. This preparation has already been performed for the included example data. -

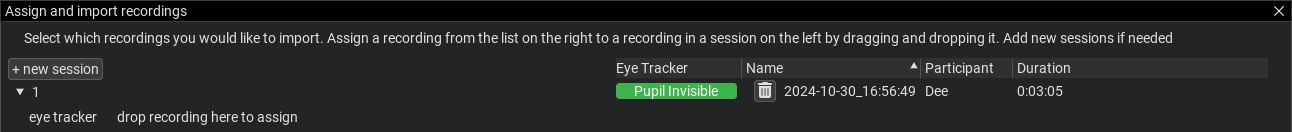

On the window that pops up, click

+ new session, and name the session1(or any name you like). Expand session1. You should now see the following: -

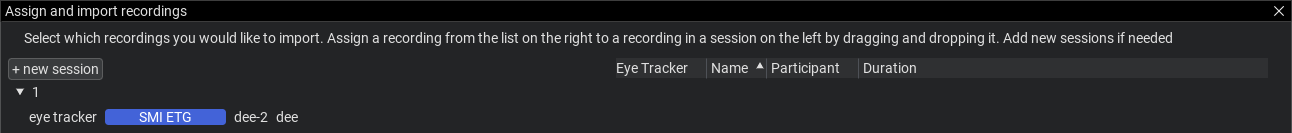

Assign the recording to the session by dragging it from the right, and dropping it on the left where it says

drop recording here. -

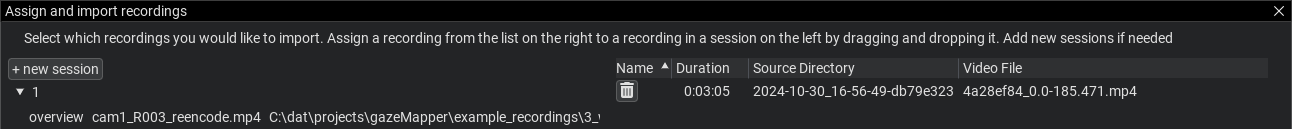

Click

Continue. The recording will now be imported. When this is done, you should see the following: -

Next, run the

Detect markersaction by right-clicking on session1and selecting the action from the menu. This will detect all markers in the video, including those needed for automatic trial coding. -

When the

Detect markersaction is finished, run theAuto code trialsaction. -

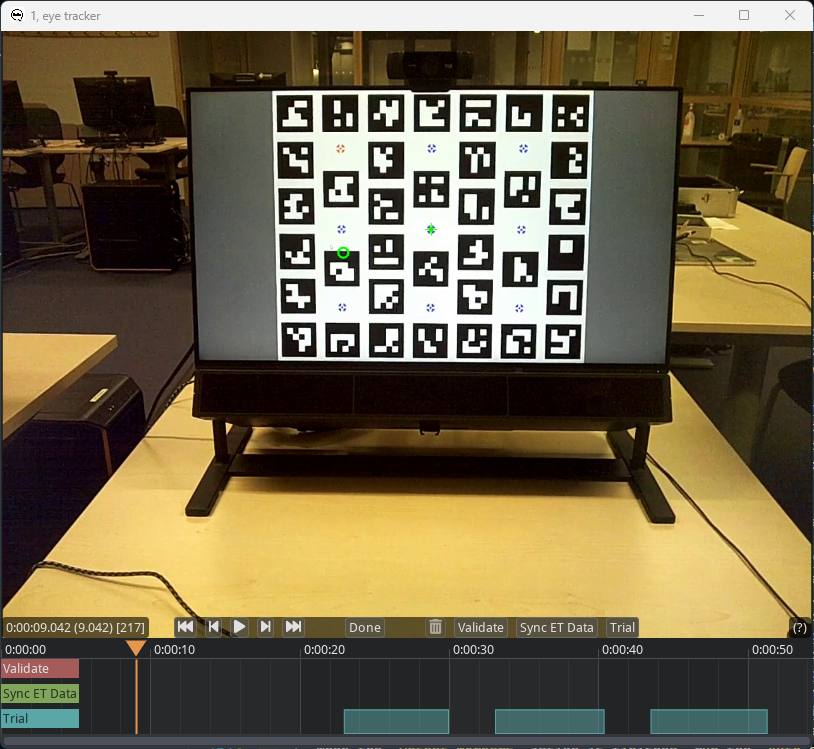

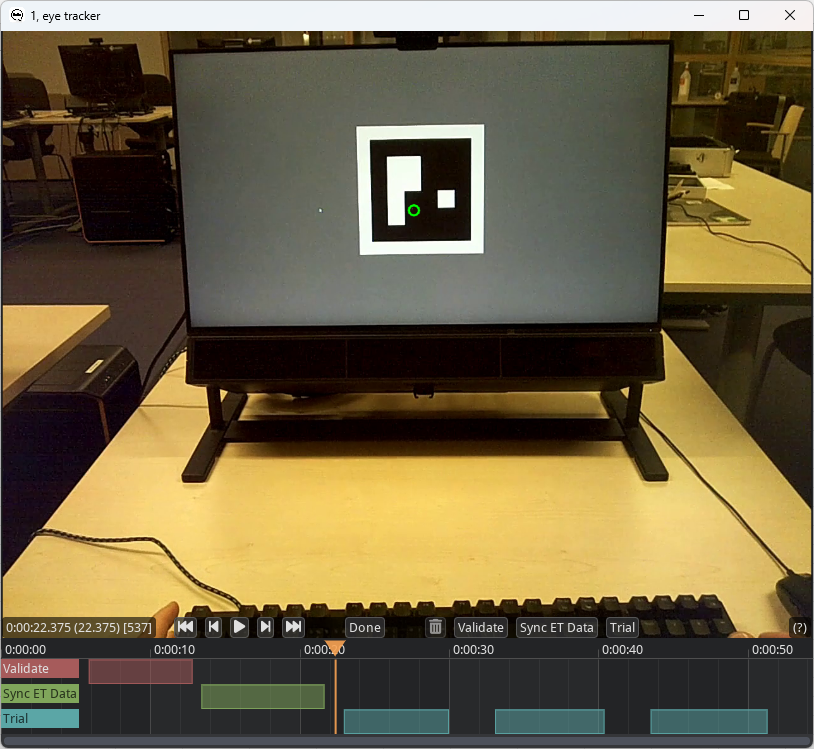

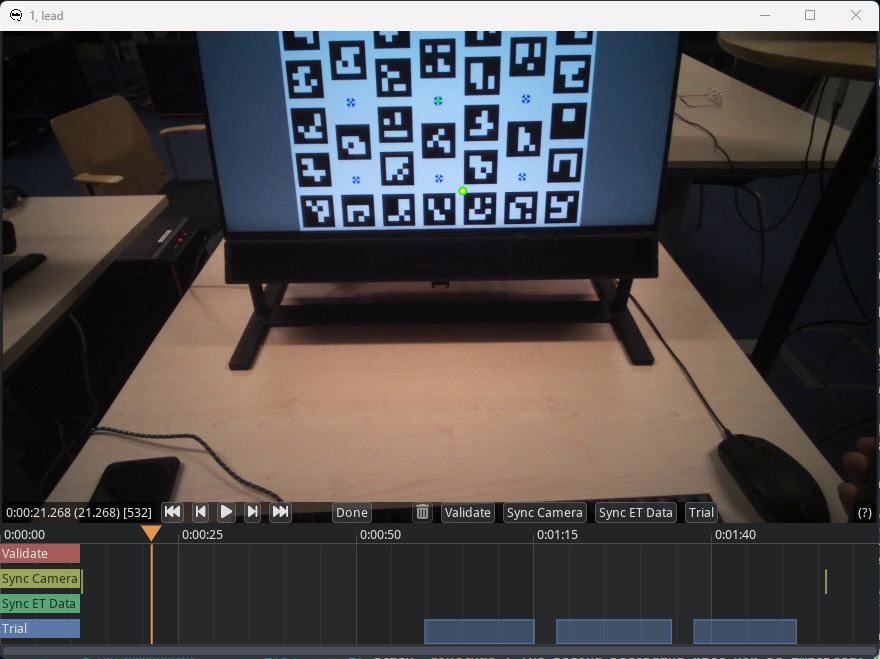

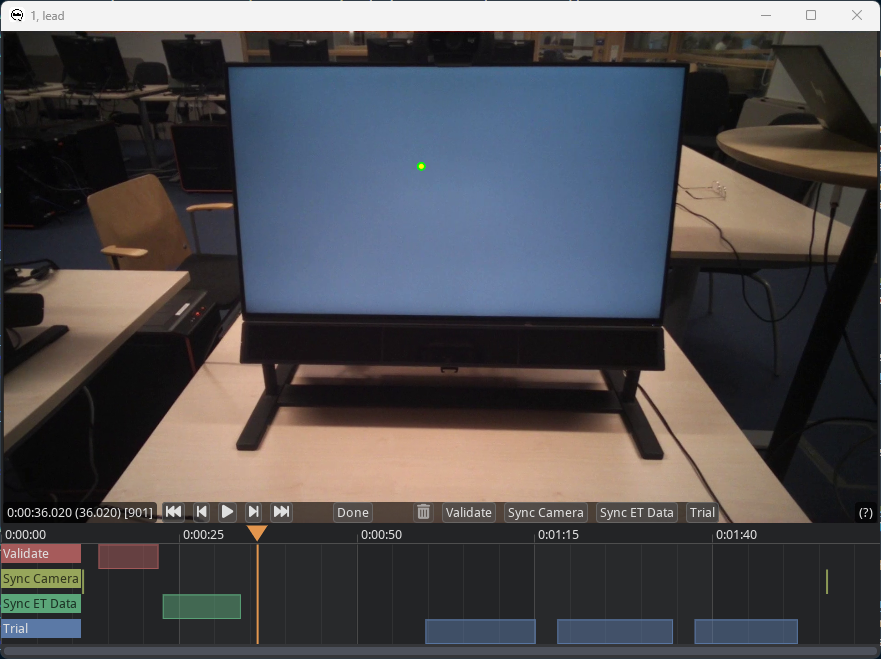

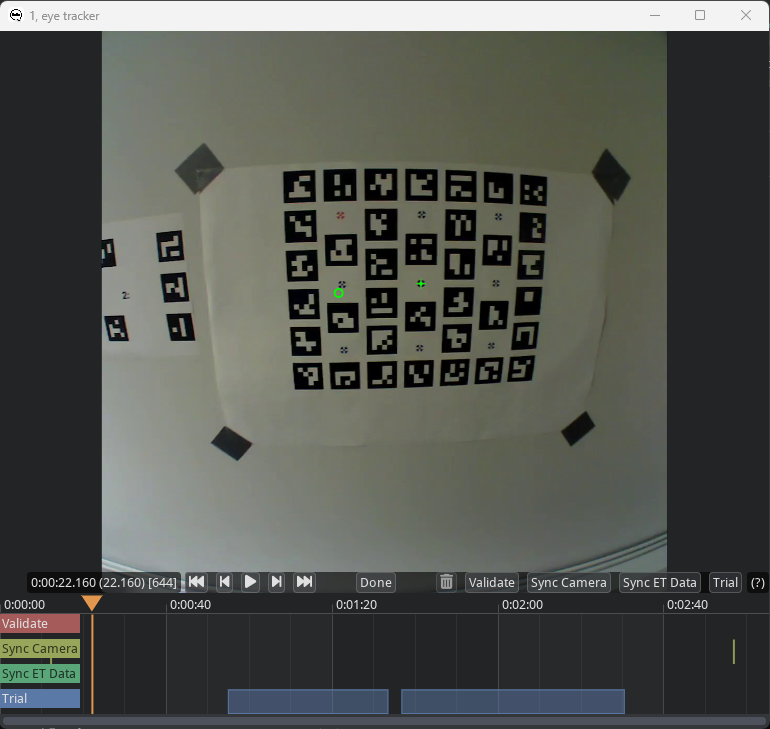

Next, run the

Code episodesaction. This will bring up a coding GUI used for annotating where the episodes of interest are in the recording.Trialshould already have been coded (see image below), use the GUI to check if trial starts and ends have been accurately determined. -

Use the seek functionality in the coder (you can press on the timeline underneath the video at the same height as the orange triangle, or use the video player controls) to find the start of the validation episode. Code the validation interval as described in step 5 in the glassesValidator manual. Furthermore, code the eye tracker synchronization episode as the beginning of the fixation on the center validation target before the participant starts nodding no and yes, and the end of the episode as the end of the fixation on the center validation target after the nodding:

Press

Doneto close the episode coding GUI. The coding file for this example can be found here. -

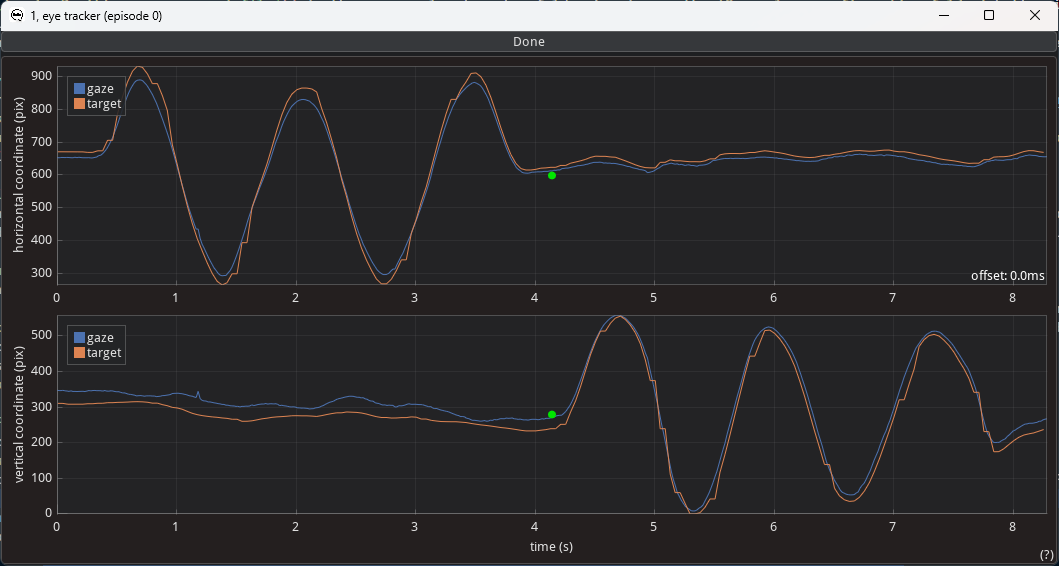

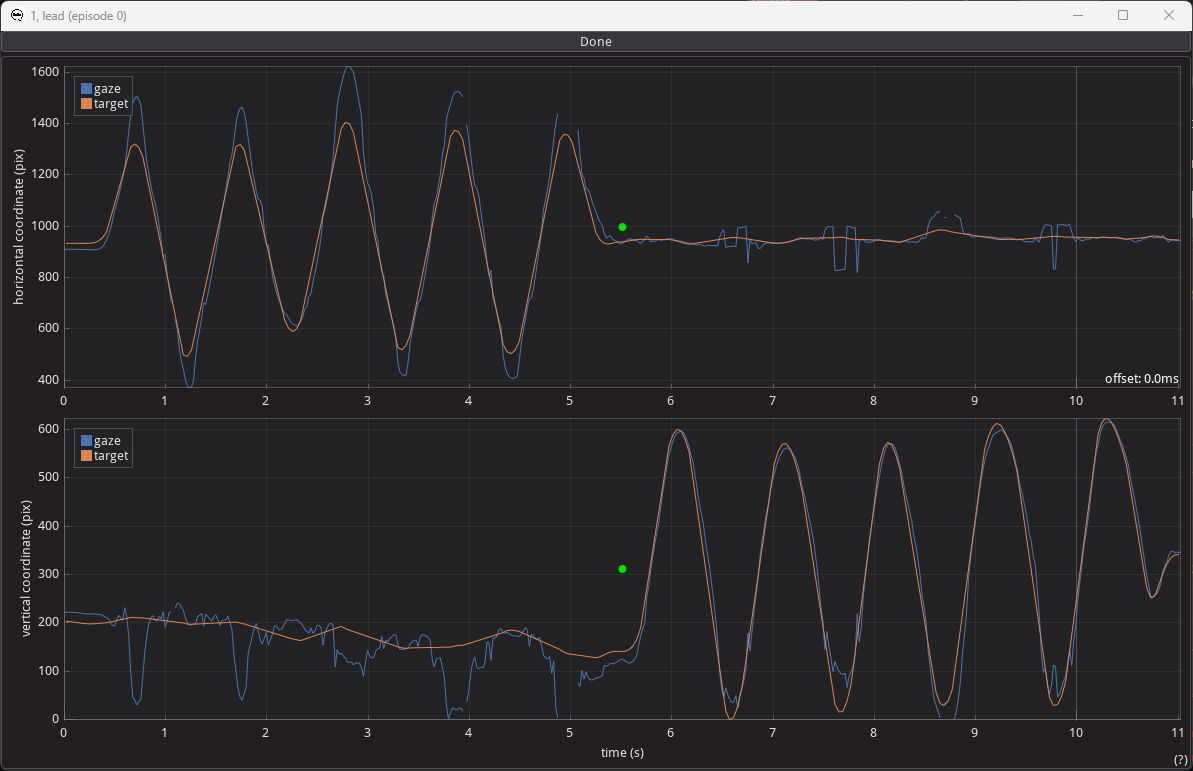

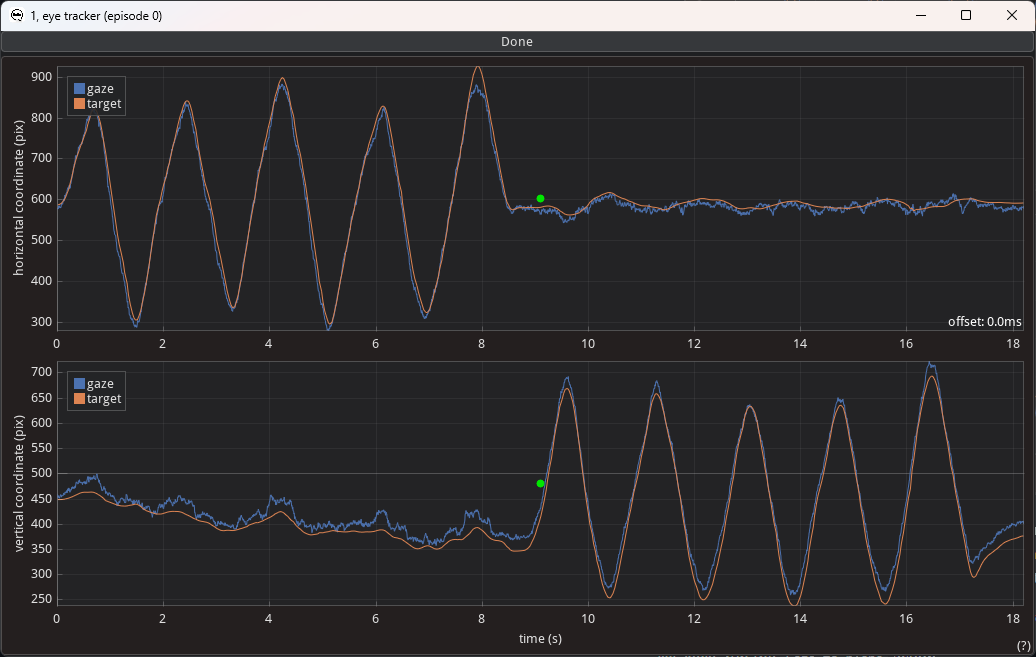

Next, run the

Sync et to camaction. This will open the following window:To align the two signals in time with each other, drag the green dot in the middle of either plot. The horizontal offset is the applied time shift (indicated by the value in the lower-right corner of the upper plot). Any vertical shift is not stored, but can be useful when aligning the two signals. When done aligning the two signals, press done atop the window.
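In gazeMapper this alignment is done by hand, by dragging one signal over the other. To illustrate what the horizontal shift represents, the sketch below estimates the same kind of time shift automatically for two synthetic signals, by finding the lag that minimizes their mean squared difference. This is not what gazeMapper does: the signals, function names and sampling rate are all hypothetical.

```python
import math


def mean_squared_difference(reference, signal, lag):
    """Mean squared difference between reference[i] and signal[i + lag]."""
    diffs = [
        (reference[i] - signal[i + lag]) ** 2
        for i in range(len(reference))
        if 0 <= i + lag < len(signal)
    ]
    return sum(diffs) / len(diffs) if diffs else math.inf


def estimate_lag(reference, signal, max_lag):
    """Return the lag in samples at which `signal` best matches `reference`.

    A positive lag means `signal` trails `reference`.
    """
    return min(range(-max_lag, max_lag + 1),
               key=lambda lag: mean_squared_difference(reference, signal, lag))


# A synthetic burst of oscillation, and a copy of it delayed by 3 samples
reference_signal = [math.sin(0.2 * i) * math.exp(-((i - 50) / 20) ** 2)
                    for i in range(100)]
delayed_signal = [0.0] * 3 + reference_signal[:-3]

lag_samples = estimate_lag(reference_signal, delayed_signal, max_lag=10)
sample_rate_hz = 50.0  # hypothetical sampling rate of both signals
time_shift_s = lag_samples / sample_rate_hz
print(lag_samples, time_shift_s)
```

The head nodding in the synchronization episode serves the same purpose as the oscillation in this synthetic signal: it gives both signals clear structure that can only line up at one time shift.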

  - Next, run the `Gaze to plane` action.

  - Next, run the `Validation` action.

  - Finally, run the `Make video` action, which draws the detected markers, the participant's gaze and the projection of that gaze to the plane on the scene video, along with information about the episode annotations.

  - Now, you can export the gaze data projected to the plane, the created video and the glassesValidator data quality measures to a folder of your choosing using the `Export trials` action. An export for this example after following the above steps is available here.

Example 2 is an extension of example 1, scaling it up to recording two participants who are simultaneously recorded while viewing both their own and each other's screens on which three images are shown in sequence. At the start of the recording, like in example 1, both participants are furthermore asked to look at a glassesValidator poster (Niehorster et al., 2023), to make it possible to determine data quality measures for the recording. Finally, the glassesValidator poster is also used for synchronizing the eye tracker's gaze data with its scene camera. We strongly recommend collecting data for both synchronization and data quality determination for all wearable eye tracker recordings.

Example data, the configuration and the coding files needed for exactly recreating the below steps are provided. The stimulus presentation code is the same as in example 1, except that for one of the stimulus presentation computers `sync_marker_qshow` should be set to true, and for the other computer `plane_which` should be set to 2. Note that to keep the example simple, image presentation on the two systems was not synchronized other than by the two participants attempting to synchronize their key presses. Any real experiment would likely synchronize stimulus presentation on the two computers, e.g. using Lab Streaming Layer.

Steps 1 and 2 are mostly the same as for Example 1, with the following additional points to note:

- The two participants perform validation with the glassesValidator poster, and the nodding no and yes for eye tracker synchronization, one after the other. This ensures that only one glassesValidator poster is shown at a time across the stimulus computers. Should both posters be presented at the same time, part of one poster may be visible to the other eye tracker. This would severely interfere with the ability to determine where the glassesValidator plane is, causing the output of the validation routine to be wildly off. Should simultaneous validation be required, a second glassesValidator poster should be made using a set of markers that does not overlap with the markers used on the first poster. See the glassesValidator manual for instructions about customizing the poster. More generally, care should be taken to only use unique markers during a recording and not have any marker appear in more than one plane or as one of the trial delineation or synchronization markers.

- The stimulus presentation program now also shows synchronization markers at the start and end of the recording. These are used to provide visual transients in both scene camera streams that can be used to synchronize the two recordings together. Using ArUco markers, these transients can be automatically detected, but any transient visible to all cameras can be used and the moment at which it occurs manually coded.
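To show how two shared transients can tie two recordings together, the sketch below fits a linear mapping from one recording's clock to the other's. It uses the times at which the start and end synchronization markers appear in each scene video. It is an illustration under stated assumptions and not gazeMapper's algorithm: whether gazeMapper corrects for clock drift, or only for an offset, is not specified in this section, and all names and numbers are hypothetical.

```python
def fit_clock_mapping(sync_in_follow, sync_in_lead):
    """Fit t_lead = scale * t_follow + offset from two shared sync events.

    Each argument is (time_of_start_marker, time_of_end_marker) in seconds,
    measured on that recording's own clock.
    """
    f0, f1 = sync_in_follow
    l0, l1 = sync_in_lead
    if f1 == f0:
        raise ValueError("the two sync events must occur at different times")
    scale = (l1 - l0) / (f1 - f0)  # relative clock rate (1.0 means no drift)
    offset = l0 - scale * f0       # shift between the two clocks
    return scale, offset


def to_lead_time(t_follow, scale, offset):
    """Express a timestamp of the follow recording on the lead's clock."""
    return scale * t_follow + offset


# The markers appear at 2.0 s and 602.0 s in one recording,
# and at 5.0 s and 605.3 s in the other
scale, offset = fit_clock_mapping((2.0, 602.0), (5.0, 605.3))
print(scale, offset)
print(to_lead_time(302.0, scale, offset))
```

With only a single transient, just the offset can be determined. A second transient at the end of the recording additionally reveals how much the two clocks drift apart over the recording.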

For completeness, below we provide a full description of how recording preparation, data recording, and data analysis for this example were performed:

- The researcher prepares the recording space. This involves

  - Preparing a glassesValidator poster (see steps 1 and 2 here), either printed so it can be hung in a convenient location (best such that it is not in view of the eye tracker's scene camera during the rest of the experiment), or shown on a projector or another computer screen. It is important that the exact size of the ArUco markers and their location is known.

  - A plane to which the gaze data will be projected needs to be delineated using ArUco markers. While in this example we achieve that by presenting ArUco markers on a computer monitor together with the stimulus images, it could also be achieved by attaching ArUco markers to the edge of the screen. More generally, any planar surface can be used by placing a sufficient number of ArUco markers on it. While there are no hard requirements on the number of ArUco markers that need to be visible in a scene camera video frame for the mapping of gaze to the plane to succeed, it is recommended to aim for at the very minimum a handful of markers that cover a reasonable space to achieve a high probability that the plane is detected correctly. We recommend that users pilot test extensively, under the lighting conditions of the experiment and using participant behavior expected during the experiment (given that both lower light and faster participant movements may decrease the likelihood that markers are detected successfully).

  It is important that the exact positions, sizes and orientations of the individual ArUco markers are known. This is required for gazeMapper to be able to correctly determine the observer's position and orientation with respect to the plane, allowing for gaze to be projected to the plane. This information has to be provided to gazeMapper. For more information, see gazeMapper planes.

- A recording then proceeds as follows.

  - Both participants are positioned in front of the stimulus screens. One participant is designated the `lead`, the other the `follower` (they could for instance be teacher and student, and the names used could of course be anything).

  - Both participants look at the screen of the lead participant, where an ArUco marker is shown for automatic synchronization.

  - One participant is first presented with the glassesValidator poster and completes a glassesValidator data collection (see step 3 here). Note that in the example, the validation is not performed at arm's length, but at the distance at which the rest of the task will be completed. If a single distance is predominant during an experiment, performing the validation data collection at that distance is recommended.

  - After this, while the participant remains at the same position, they are instructed to continuously fixate the fixation target at the center of the validation poster while slowly nodding no with their head five times, and then slowly nodding yes five times.

  - When the first participant is done, the other participant performs the same validation and synchronization procedure from the last two steps.

  - The main task commences. In the example, the participants simply view pictures presented on their screen and also look at each other's screens. They press the spacebar when they are done viewing the picture, after which a new picture is shown. Rough synchronization between the presentations is maintained by the participants attempting to press the spacebar in synchrony. On both screens, each picture presentation is preceded and succeeded by a sequence of single ArUco markers that allows the trials in the recording to be delineated automatically. Note however that the trial delineation of the lead recording will be used by both recordings in gazeMapper.

  - Finally, both participants look at the screen of the lead participant again, where another ArUco marker is shown for synchronization.

- To start processing a recording with gazeMapper, a gazeMapper project first needs to be created and configured. If you want to skip the configuration and just quickly test out gazeMapper, you can create a new gazeMapper project following the first step below, and then simply replace the content of this project's config folder with the configuration provided here. Reopen the project in gazeMapper after replacing the configuration and skip to step 4.

-

Run gazeMapper and make a new gazeMapper project.

-

You will be greeted by the following screen, go to the

Project settingspane. -

First we have to tell gazeMapper what recordings to expect for a session, click on

Edit session definitionto do so. -

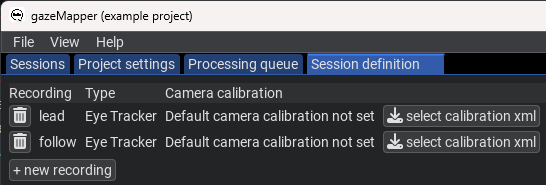

Click

+ new recordingto define two recordings. We'll call oneleadand the otherfollow. SelectEye Trackeras recording type for both. The screen will now look as follows. -

Back on the

Project settingspane, click onEdit planes. Here we need to add both the glassesValidator poster and the stimulus screen as planes.- Click on

+ new planeand call itvalidate. SelectGlassesValidatoras plane type. - Since the example did not use the default glassesValidator poster printed on A2 format but instead presented the glassesValidator poster on screen, a custom glassesValidator setup is needed. To set this up, uncheck the

Use default setupbutton for the validate plane. Press theOpen plane configuration folderbutton to open the plane's configuration folder. The files there will have to be edited outside of gazeMapper to reflect the glassesValidator poster as presented on the computer screen. For this example, use the files available here, which were created based on calculations in the Excel sheet provided here. Besides custommarkerPositions.csvandtargetPositions.csvfiles to correctly specify the location of validation targets and ArUco markers on the screen, also thevalidationSetup.txtfile was edited to set the correct size for the ArUco markers (markerSidevariable), the validation targets' diameter (targetDiameter) and the size of the plane (gridColsandgridRows, which were set to the size of the monitor). Note that for glassesValidator, these sizes and positions are specified in cm, not mm. - Add two more planes using the

+ new planebutton. Call themlead_screenandfollow_screen. SelectPlane 2Das plane type for both. - Place the

markerPositions_screen.csvfiles found here for the screens in the respective screen plane's setup folder (again use theOpen plane configuration folderbutton if needed). In the GUI, provide the names of these files for theMarker fileparameter. These files were also created using the calculations in the Excel sheet provided here. For more information about such plane definition files, see below. - Further configure both planes: set the

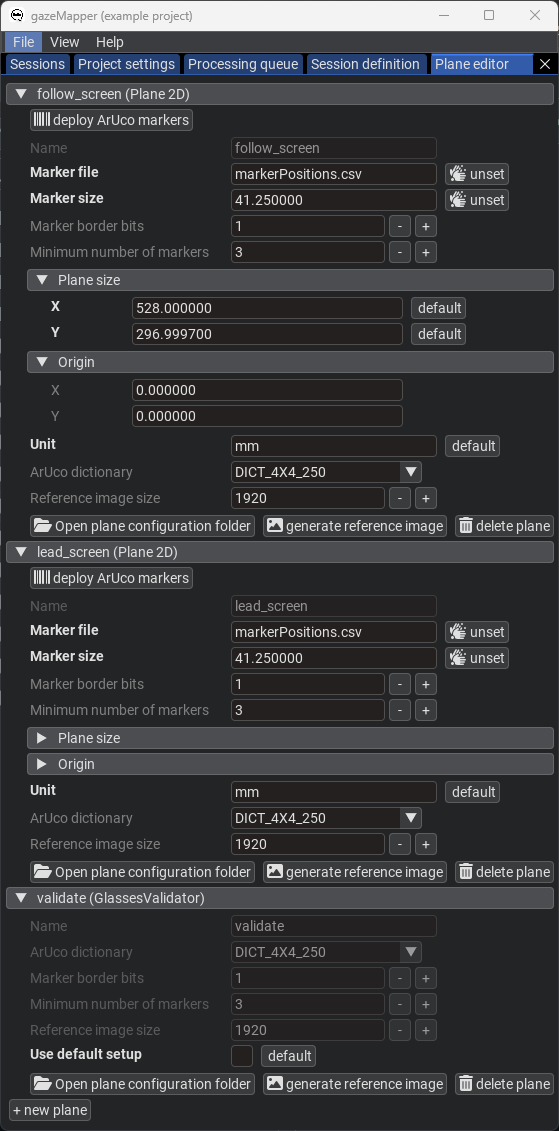

Marker sizeto41.25, theUnittomm, and the plane size toX:528andY:296.9997. See the below image for the final plane configuration.

- Click on

-

Back on the

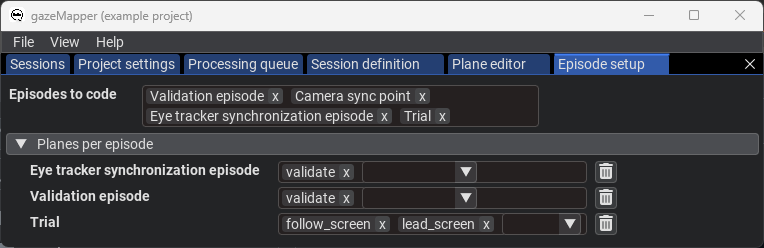

Project settingspane, click onEpisode setup. Here we configure what episodes can be coded, and what plane(s) are associated with each episode.- For the

Episodes to code, add theValidation episode,Camera sync point,Eye tracker synchronization episodeandTrial. - Under

Planes per episode, add theValidation episode,Eye tracker synchronization episodeandTrialitems. Set thevalidateplane for theValidation episodeandEye tracker synchronization episode, and the twoscreenplanes for theTrialepisode. This indicates that for episode in the recording coded as validation or synchronization episodes only thevalidateplane will be searched for and processed, while for trials thescreenplanes will be used.

- For the

-

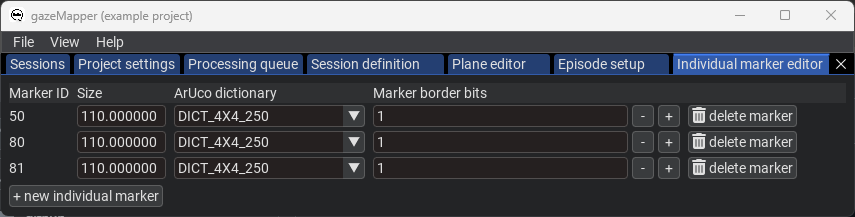

Next, detection of the markers to delineate trials needs to be configured. On the

Project settingspane, click onEdit individual markersto configure these. -

- Some settings also need to be configured on the `Project settings` page itself. Specifically, we need to set:

  - `Synchronization: Reference recording` to `lead`.

  - `Synchronization: Do time stretch?` to `False` (unchecked/disabled).

  - `Gaze data synchronization: Method to get camera movement` to `Plane`, as we'll use the glassesValidator plane for synchronizing gaze data and the scene camera.

  - Set up `Automated coding of synchronization episodes` by clicking on `click to set`. Expand the created settings. Set `Marker(s)` to `50`. For the rest of the settings, the defaults are ok. Note also that multiple markers could be used as synchronization points.

  - Set up `Automated coding of trial episodes` by clicking on `click to set`. Expand the created settings. Set `Start marker(s)` to `80 81` and `End marker(s)` to `81 80`, since these are the markers used in the example, in that order, to denote trial starts and ends. For the rest of the settings, the defaults are ok. Note that different markers or marker sequences can also be used for starts and ends.

  - When processing the recording, we want to output scene videos for both recordings, with detected markers and the gaze of both participants projected to the validation and screen planes. To set this up, set both `lead` and `follow` (the names of the recordings we defined in the session definition) for `Video export: which recordings`.

  - Furthermore, set up the colors with which to draw gaze in the `Video export: recording colors` field. Press `Add item` and add both the `lead` and `follow` recordings. For `lead`, use the color `Red`: `255`, `Green`: `127` and `Blue`: `0`. For `follow`, use the color `Red`: `0`, `Green`: `95` and `Blue`: `191`.

  - Open `Video export: Color for 3D gaze position on the plane` and click `Unset group` to unset that color, which means that that gaze type will not be drawn on the video.

  - Set `Video export: Videos on which to draw gaze projected to plane`, `Video export: Videos on which to draw gaze vectors(s)` and `Video export: Videos on which to draw camera position(s)` all to both `follow` and `lead`.

  - For `Video export: Which gaze on plane to show?` select `Average of gaze vectors`. This will use, if the eye tracker provides it, the intersection of the gaze vectors with the plane instead of the gaze position on the scene video. This may be more accurate, depending on the assumptions the eye tracker makes when determining the gaze position on the scene video. Users are recommended to pilot what setting is most suitable for their project and equipment.
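To make the idea of start and end marker sequences such as `80 81` and `81 80` more concrete, here is a minimal sketch of how trials could be delineated from a list of marker detections. This is not gazeMapper's implementation: the function names `collapse_runs` and `find_trials`, the input format (time-ordered `(frame, marker_id)` tuples) and the rule that a trial runs from just after the start sequence to just before the end sequence are all assumptions made for illustration.

```python
def collapse_runs(detections, max_gap=1):
    """Merge detections of the same marker on (near-)consecutive frames.

    detections: time-ordered (frame, marker_id) tuples (hypothetical format).
    Returns a list of [marker_id, first_frame, last_frame] runs.
    """
    runs = []
    for frame, marker_id in detections:
        if runs and runs[-1][0] == marker_id and frame - runs[-1][2] <= max_gap:
            runs[-1][2] = frame  # extend the current run
        else:
            runs.append([marker_id, frame, frame])  # start a new run
    return runs


def find_trials(detections, start_seq=(80, 81), end_seq=(81, 80)):
    """Return (first_frame, last_frame) per trial.

    Assumed rule: a trial starts on the frame after the start sequence ends
    and stops on the frame before the end sequence begins.
    """
    runs = collapse_runs(detections)
    trials, trial_start = [], None
    i = 0
    while i + 1 < len(runs):
        pair = (runs[i][0], runs[i + 1][0])
        if trial_start is None and pair == tuple(start_seq):
            trial_start = runs[i + 1][2] + 1
            i += 2
        elif trial_start is not None and pair == tuple(end_seq):
            trials.append((trial_start, runs[i][1] - 1))
            trial_start = None
            i += 2
        else:
            i += 1
    return trials


# Made-up detections: two trials, each bracketed by 80->81 and 81->80.
detections = [(10, 80), (11, 80), (12, 81), (13, 81),
              (50, 81), (51, 81), (52, 80), (53, 80),
              (60, 80), (61, 81), (90, 81), (91, 80)]
print(find_trials(detections))
```

Using the reversed sequence for trial ends means the same two markers suffice for both events, since only the order in which they appear distinguishes a start from an end.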

-

Now that the project is set up, we are ready to import and process recordings.

-

On the

Sessionpane, clickimport eye tracker recordings. There, select the folder containing the example data and indicate you're looking forTobii Glasses 2recordings. Note that you could also trigger import by drag-dropping a data folder onto gazeMapper. -

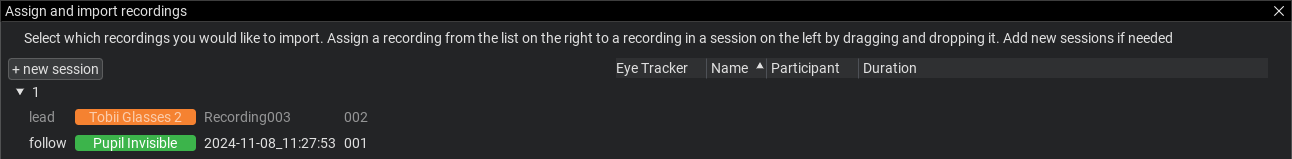

On the window that pops up, click

+ new session, and name the session1(or any name you like). Expand session1. You should now see the following: -

Assign the recording to the session by dragging it from the right, and dropping it on the left for the

leadrecording where it saysdrop recording here. ClickContinue. The recording will now be imported. -

Again click

import eye tracker recordings, select the folder containing the example data and this time indicate you're looking forPupil Invisiblerecordings. Assign the found Pupil Invisible recording to thefollowrecording.Note that in real use cases with more recordings that you can assign multiple recordings to one or multiple sessions in one go using this dialogue.

-

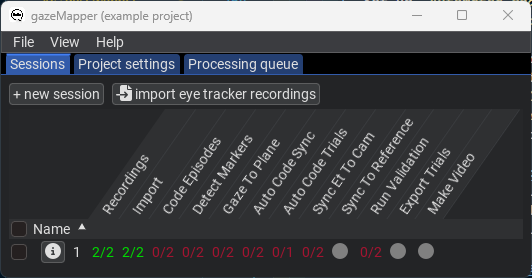

Click

Continue. The second recording will now be imported. When this is done, you should see the following: -

Next, run the

Detect markersaction by right-clicking on session1and selecting the action from the menu. This will detect all markers in the video, including those needed for automatic trial coding. -

When the

Detect markersaction is finished, run theAuto code syncandAuto code trialsactions. -

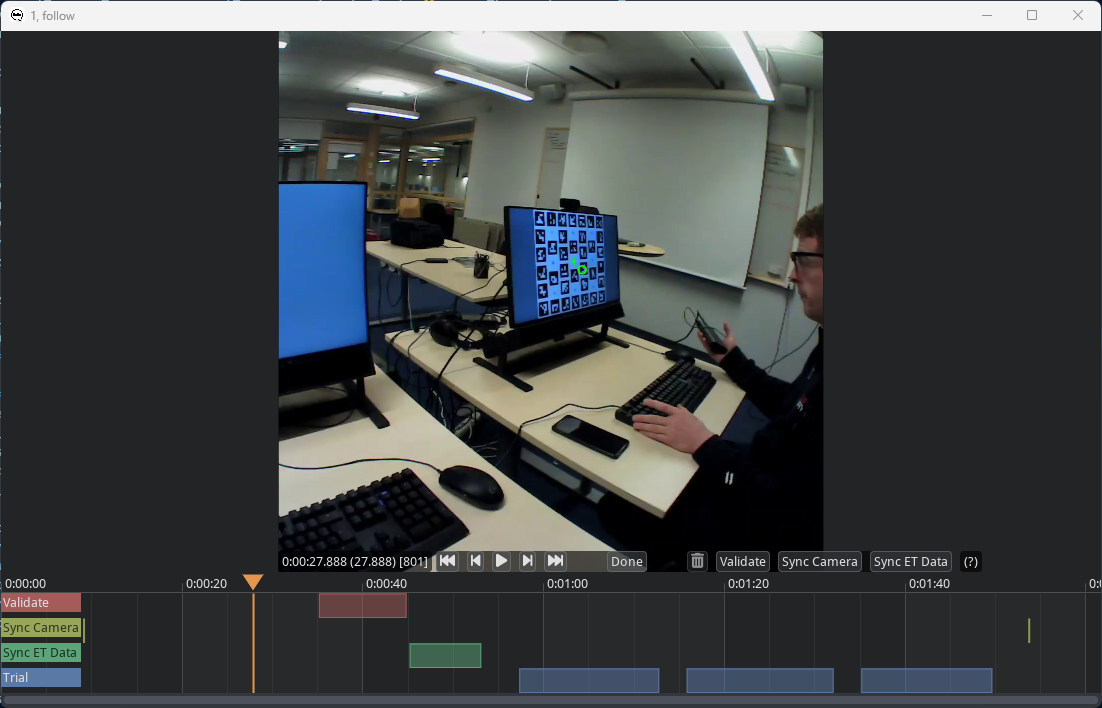

Next, run the

Code episodesaction. This will bring up a coding GUI used for annotating where the episodes of interest are in the recording.TrialandSync camerashould already have been coded (see image below), use the GUI to check if trial starts and ends and the synchronization timepoints have been accurately determined. -

Use the seek functionality in the coder (you can press on the timeline underneath the video at the same height as the orange triangle, or use the video player controls) to find the start of the validation episode. Code the validation interval as described in step 5 in the glassesValidator manual. Furthermore, code the eye tracker synchronization episode as the beginning of the fixation on the center validation target before the participant starts nodding no and yes, and the end of the episode as the end of the fixation on the center validation target after the nodding:

Press

Doneto close the episode coding GUI. -

The coding GUI for the other recording of the session will now appear. Also code the validation and eye tracker synchronization episode for this recording. Once done it should look as follows:

Press

Doneto close the episode coding GUI. The coding files for this example can be found here. -

- Next, run the `Sync et to cam` action. This will open the following window:

  To align the two signals in time with each other, drag the green dot in the middle of either plot. The horizontal offset is the applied time shift (indicated by the value in the lower-right corner of the upper plot). Any vertical shift is not stored, but can be useful when aligning the two signals. When done aligning the two signals, press `done` at the top of the window.

- Next, run the `Sync to reference` action to synchronize the two recordings together.

- Next, run the `Gaze to plane` action.

- Next, run the `Validation` action.

- Finally, run the `Make video` action, which draws the detected markers, the participant's gaze and the projection of that gaze to the plane on the scene video, along with information about the episode annotations.

- Now, you can export the gaze data projected to the plane, the created video and the glassesValidator data quality measures to a folder of your choosing using the `Export trials` action. An export for this example after following the above steps is available here.

Example data, the configuration and the coding files needed for exactly recreating the below steps are provided. The stimulus material and used planes are also available.

Example 3 is a more advanced example, showing a recording of a participant looking at and interacting with multiple planes while also being filmed with an external overview camera. The participant performs a highly simplified stock counting task, in which they count how many apples, pears and mangos are shown in images on an iPad and then report their counts on papers spread across the scene. At the start of the recording, the participant is furthermore asked to look at a glassesValidator poster (Niehorster et al., 2023), to make it possible to determine data quality measures for the recording. Finally, the glassesValidator poster is also used at both the start and the end of the recording for synchronizing the eye tracker's gaze data with its scene camera. The preparation, data recording, and data analysis for this example were performed as follows:

- The researcher prepares the recording space. This involves

  - Printing a glassesValidator poster (see steps 1 and 2 here) and hanging it on the wall.

  - Preparing the stimulus material on the iPad, including ArUco markers to define the stimulus plane, and ArUco markers for automatic delineation of trials and automatic synchronization of the eye tracker and the overview camera.

  - Printing multiple further planes for the fruit counts and distributing them around the recording space.

  - Setting up an external camera. Set it up such that both the observer and the various planes are in view during the whole recording and such that a sufficient number of markers is visible in each recording, and ensure that reflections of the environment on the tablet do not cause issues with later processing of the recording. Note here that during pilot recordings of this example, we ran into issues with ArUco markers being reflected in the tablet and being detected by gazeMapper, and with the lighting in the room making part of the tablet screen illegible. To alleviate these issues, we adjusted camera positions and selectively switched off lights.

As in the previous examples, it is important that the exact positions, sizes and orientations of the individual ArUco markers are known. This is required for gazeMapper to be able to correctly determine the observer's position and orientation with respect to each plane, allowing for gaze to be projected to these planes. This information has to be provided to gazeMapper. For more information, see gazeMapper planes.

- A recording then proceeds as follows.

- First the participant holds up the iPad so that the overview camera can see the contents of its screen, and also looks at the iPad. They then activate the PowerPoint presentation on the iPad, which flashes a synchronization marker on the screen, which will be used in analysis to synchronize the overview camera to the eye tracker recording.

- Then, the participant is positioned in front of the glassesValidator poster and completes a glassesValidator data collection (see step 3 here).

- After this, while the participant remains at the same position, they are instructed to continuously fixate the fixation target at the center of the validation poster while slowly nodding no with their head five times, and then slowly nodding yes five times.

- Next, the main task commences. The participant again picks up the iPad and continues the PowerPoint presentation. Next in the presentation, two ArUco markers are shown in sequence, which allows the trials in the recording to be delineated automatically. Then, a stimulus image is shown. The participant counts the number of apples, pears and mangos in the image, and writes their count on the paper for the respective fruit. Once done, they continue the presentation. A sequence of ArUco markers is shown signalling the end of the trial, and thereafter another sequence signalling the start of the next trial. The participant again writes down their fruit count and advances the presentation, upon which the trial end ArUco marker sequence is again shown.

- They then stand in front of the glassesValidator poster and again slowly nod no with their head five times, and then slowly nod yes five times while maintaining fixation of the fixation target at the center of the validation poster.

- Finally, the participant holds up the iPad to the overview camera and advances the presentation, upon which another ArUco marker for synchronization is shown.

- To start processing a recording with gazeMapper, a gazeMapper project first needs to be created and configured. If you want to skip the configuration and just quickly test out gazeMapper, you can create a new gazeMapper project following the first step below, and then simply replace the content of this project's config folder with the configuration provided here. Reopen the project in gazeMapper after replacing the configuration and skip to step 4.

- Run gazeMapper and make a new gazeMapper project.

- You will be greeted by the following screen. Go to the `Project settings` pane.

- First we have to tell gazeMapper what recordings to expect for a session. Click on `Edit session definition` to do so.

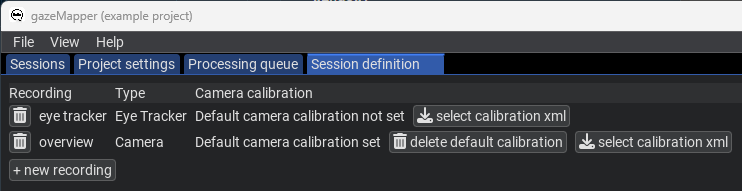

Click

+ new recordingto define two recordings. We'll call oneeye trackerand the otheroverview. SelectEye Trackeras recording type for the eye tracker recording, and Camera as the type for the overview recording. Next, add a camera calibration file for the camera recording (this is not needed for the eye tracker recordings, as the used eye tracker provides a calibration for the scene camera). To do so, click theselect calibration xmlbutton, and load this file. The screen will now look as follows. -

- Back on the `Project settings` pane, click on `Edit planes`. Here we need to add the glassesValidator poster, the iPad screen and the three answer sheets as planes.

  - Click on `+ new plane` and call it `validate`. Select `GlassesValidator` as plane type. Since the default A2 glassesValidator poster was used, this plane requires no further configuration.

  - Add four more planes using the `+ new plane` button. Call them `tablet`, `apples`, `pears` and `mangos`. Select `Plane 2D` as plane type for all four.

  - Place the `markerPositions.csv` files found here for the four planes in the respective plane's setup folders (use the `Open plane configuration folder` button in gazeMapper to find the plane's folder, if needed). In the GUI, provide the names of these files for the `Marker file` parameter. These files were created using the calculations in the Excel sheet provided here. For more information about such plane definition files, see below.

  - Further configure the planes: for the tablet plane, set the `Marker size` to `20.47309`; set it to `42.586052` for the other three planes. For the tablet plane, set the plane size to `X`: `261` and `Y`: `148`; for the other three planes, set it to `X`: `189` and `Y`: `162`. For all four planes, set the `Unit` to `mm`. See the below image for part of the final plane configuration.

- Back on the `Project settings` pane, click on `Episode setup`. Here we configure what episodes can be coded, and what plane(s) are associated with each episode.

  - For the `Episodes to code`, add the `Validation episode`, `Camera sync point`, `Eye tracker synchronization episode` and `Trial`.

  - Under `Planes per episode`, add the `Validation episode`, `Eye tracker synchronization episode` and `Trial` items. Set the `validate` plane for the `Validation episode` and `Eye tracker synchronization episode`, and the four other planes for the `Trial` episode. This indicates that for episodes in the recording coded as validation or synchronization episodes, only the `validate` plane will be searched for and processed, while for trials the other four planes will be used.

- Next, detection of the markers to delineate trials needs to be configured. On the `Project settings` pane, click on `Edit individual markers` to configure these.

- Some settings also need to be configured on the `Project settings` page itself. Specifically, we need to set:

  - `Synchronization: Reference recording` to `eye tracker`.

  - `Synchronization: Do time stretch?` to `True` (checked/enabled).

  - `Synchronization: Stretch which recording?` to `Other recording(s)`.

  - Click `Synchronization: Average recordings?` to create the setting, but do not assign any recording, since we do not want to use this functionality.

  - `Gaze data synchronization: Method to get camera movement` to `Plane`, as we'll use the glassesValidator plane for synchronizing gaze data and the scene camera.

  - Set up `Automated coding of synchronization episodes` by clicking on `click to set`. Expand the created settings. Set `Marker(s)` to `50`. For the rest of the settings, the defaults are ok. Note also that multiple markers could be used as synchronization points.

  - Set up `Automated coding of trial episodes` by clicking on `click to set`. Expand the created settings. Set `Start marker(s)` to `80 81` and `End marker(s)` to `81 80`, since these are the markers used in the example, in that order, to denote trial starts and ends. For the rest of the settings, the defaults are ok. Note that different markers or marker sequences can also be used for starts and ends.

  - When processing the recording, we want to output videos for both recordings, with detected markers and the participant's gaze projected to the planes. To set this up, set both `eye tracker` and `overview` (the names of the recordings we defined in the session definition) for `Video export: which recordings`.

  - Furthermore, set up the colors with which to draw gaze in the `Video export: recording colors` field. Press `Add item` and add the `eye tracker` recording (the overview recording cannot be added as it has no gaze data). For the `eye tracker` recording, use the color `Red`: `255`, `Green`: `127` and `Blue`: `0`.

  - Finally, set `Video export: Videos on which to draw gaze projected to plane`, `Video export: Videos on which to draw gaze vectors(s)` and `Video export: Videos on which to draw camera position(s)` all to `overview`. For `Video export: Videos on which to draw gaze projected to plane`, also specify `eye tracker`. Set `Video export: Gaze position margin` to `0.1`.
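In this example a synchronization marker is shown both at the start and at the end of the recording, and time stretching is enabled. One way to think about what two sync points make possible is a linear mapping between the two clocks: an offset plus a stretch factor that absorbs clock drift. The sketch below illustrates that idea only. It is not gazeMapper's code, and the function names `fit_time_mapping` and `to_reference_time` as well as the example times are hypothetical.

```python
def fit_time_mapping(ref_times, other_times):
    """Fit t_ref = scale * t_other + offset through two sync points.

    ref_times, other_times: (first, second) times in seconds at which the
    same two sync events were seen in the reference and the other recording.
    """
    (r0, r1), (o0, o1) = ref_times, other_times
    if o1 == o0:
        raise ValueError("the two sync points must occur at different times")
    scale = (r1 - r0) / (o1 - o0)   # stretch factor (1.0 means no drift)
    offset = r0 - scale * o0        # shift between the two clocks
    return scale, offset


def to_reference_time(t_other, scale, offset):
    """Express a timestamp of the other recording on the reference clock."""
    return scale * t_other + offset


# Made-up example: the overview camera clock runs slightly fast.
scale, offset = fit_time_mapping(ref_times=(10.0, 610.0),
                                 other_times=(2.0, 602.6))
print(scale, offset, to_reference_time(300.0, scale, offset))
```

With only a single sync point, just the offset can be determined, which is why an additional sync marker at the end of the recording is needed when drift is to be compensated.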

- Now that the project is set up, we are ready to import and process recordings.

- On the `Session` pane, click `import eye tracker recordings`. There, select the folder containing the example data and indicate you're looking for `Pupil Invisible` recordings. Note that you could also trigger import by drag-dropping a data folder onto gazeMapper.

- On the window that pops up, click `+ new session`, and name the session `1` (or any name you like). Expand session `1`. You should now see the following:

- Assign the recording to the session by dragging it from the right, and dropping it on the left for the `eye tracker` recording where it says `drop recording here`. Click `Continue`. The recording will now be imported.

- Click `import camera recordings`, select the folder containing the example data and click `continue`. Assign the found camera recording (`cam1_R003_reencode.mp4`) to the `overview` recording.

  Note that in real use cases with more recordings, you can assign multiple recordings to one or multiple sessions in one go using these dialogues.

- Click `Continue`. The camera recording will now be imported. When this is done, you should see the following:

Next, run the

Detect markersaction by right-clicking on session1and selecting the action from the menu. This will detect all markers in the video, including those needed for automatic trial coding. -

When the

Detect markersaction is finished, run theAuto code syncandAuto code trialsactions. -

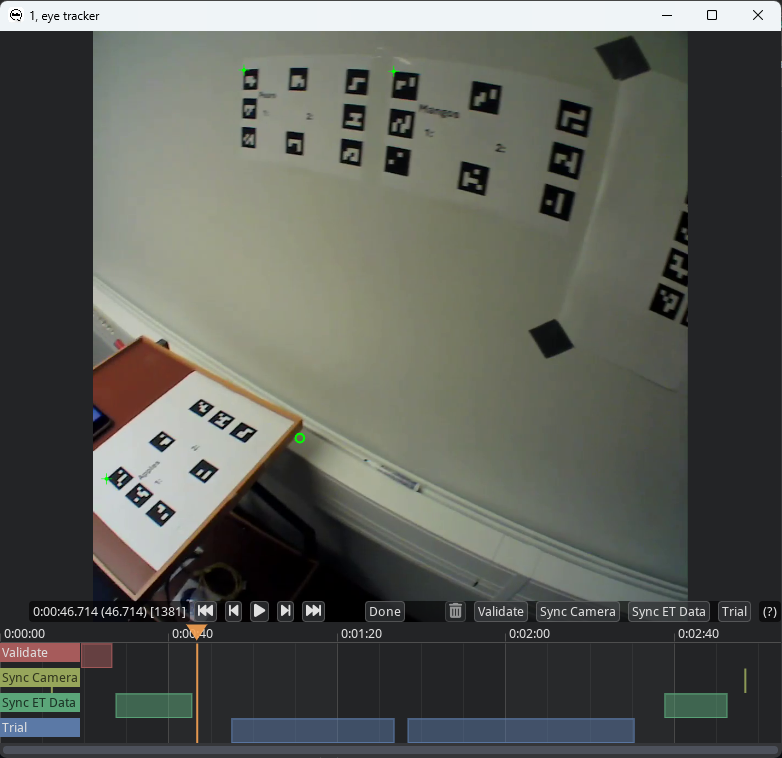

Next, run the

Code episodesaction. This will bring up a coding GUI used for annotating where the episodes of interest are in the recording.TrialandSync camerashould already have been coded (see image below), use the GUI to check if trial starts and ends and the synchronization timepoints have been accurately determined. -

Use the seek functionality in the coder (you can press on the timeline underneath the video at the same height as the orange triangle, or use the video player controls) to find the start of the validation episode. Code the validation interval as described in step 5 in the glassesValidator manual. Furthermore, code the eye tracker synchronization episode as the beginning of the fixation on the center validation target before the participant starts nodding no and yes, and the end of the episode as the end of the fixation on the center validation target after the nodding. Code both the episode at the beginning of the recording and the episode near the end of the recording:

Press

Doneto close the episode coding GUI. -

The coding GUI for the other recording of the session will now appear. Here too, check that the synchronization timespoints are correctly coded. Press

Doneto close the episode coding GUI. The coding files for this example can be found here. -

- Next, run the `Sync et to cam` action. This will open the following window:

  To align the two signals in time with each other, drag the green dot in the middle of either plot. The horizontal offset is the applied time shift (indicated by the value in the lower-right corner of the upper plot). Any vertical shift is not stored, but can be useful when aligning the two signals. When done aligning the two signals, press `done` at the top of the window.

- Next, run the `Sync to reference` action to synchronize the two recordings together.

- Next, run the `Gaze to plane` action.

- Next, run the `Validation` action.

- Finally, run the `Make video` action, which draws the detected markers, the participant's gaze and the projection of that gaze to the plane on the scene video, along with information about the episode annotations.

- Now, you can export the gaze data projected to the plane, the created video and the glassesValidator data quality measures to a folder of your choosing using the `Export trials` action. An export for this example after following the above steps is available here.

The gazeMapper GUI organizes recordings into a project folder. Each session to be processed is represented by a folder in this project folder, and one or multiple recordings are stored in subfolders of a session folder. After importing recordings, all further processing is done inside these session and recording folders. The source directories containing the original recordings remain untouched when running gazeMapper. A gazeMapper project folder furthermore contains a folder `config` specifying the configuration of the project. So an example directory structure may look like:

    my project/
    ├── config/
    │   ├── plane 1/
    │   ├── plane 2/
    │   └── validation plane/
    ├── session 01/
    │   ├── teacher/
    │   ├── student/
    │   └── overview camera/
    ├── session 02/
    │   ├── teacher/
    │   ├── student/
    │   └── overview camera/
    ...

where `session 01` and `session 02` are individual recording sessions, each made up of a `teacher`, a `student` and an `overview camera` recording. `plane 1` and `plane 2` contain definitions of planes that gazeMapper will map gaze to, and `validation plane` is an additional plane used for validation of the eye tracker's calibration using glassesValidator; see below for documentation.

When not using the GUI and running gazeMapper using your own scripts, such a project folder organization is not required. Working folders for a session can be placed anywhere (though recording folders should be placed inside a session folder), and a folder for a custom configuration can also be placed anywhere (but its location needs to be provided using the `config_dir` argument of all the functions in `gazeMapper.process`). The `gazeMapper.process` functions simply take the path to a session or recording folder.
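As an illustration of the folder layout described above, the following sketch lists the sessions in a project folder together with their recording subfolders. It uses only the Python standard library and does not call gazeMapper. The function name `list_sessions` is hypothetical, and treating every top-level folder other than `config` as a session is an assumption of this sketch.

```python
from pathlib import Path


def list_sessions(project_dir, config_name="config"):
    """Map each session folder name to the names of its recording subfolders.

    Assumption: every top-level folder except the configuration folder is a
    session, and every subfolder of a session is a recording.
    """
    sessions = {}
    for entry in sorted(Path(project_dir).iterdir()):
        if entry.is_dir() and entry.name != config_name:
            sessions[entry.name] = sorted(
                p.name for p in entry.iterdir() if p.is_dir()
            )
    return sessions
```

For the example structure shown above, `list_sessions("my project")` would return a dictionary with the keys `session 01` and `session 02`, each listing `overview camera`, `student` and `teacher`.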

During the importing and processing of a session, or an eye tracker or camera recording, a series of files are created in the working folder of the session and the recording(s). These are the following (not all of the files are created for a camera recording, for instance, there is no gaze data associated with such a recording):

| file | location | produced by | description |
|---|---|---|---|
| `calibration.xml` | recording | `Session.import_recording` | Camera calibration parameters for the (scene) camera. |
| `frameTimestamps.tsv` | recording | `Session.import_recording` | Timestamps for each frame in the (scene) camera video. |
| `gazeData.tsv` | recording | `Session.import_recording` | Gaze data cast into the glassesTools common format used by gazeMapper. Only for eye tracker recordings. |
| `recording_info.json` | recording | `Session.import_recording` | Information about the recording. |
| `recording.gazeMapper` | recording | `Session.import_recording` | JSON file encoding the state of each recording-level gazeMapper action. |
| `worldCamera.mp4` | recording | `Session.import_recording` | Copy of the (scene) camera video (optional, depends on the `import_do_copy_video` option). |
| `coding.tsv` | recording | `process.code_episodes` | File denoting the analysis, synchronization and validation episodes to be processed. This is produced with the coding interface included with gazeMapper. Can be manually created or edited to override the coded episodes. |
| `planePose_<plane name>.tsv` | recording | `process.detect_markers` | File with information about plane pose w.r.t. the (scene) camera for each frame where the plane was detected. |
| `markerPose_<marker ID>.tsv` | recording | `process.detect_markers` | File with information about marker pose w.r.t. the (scene) camera for each frame where the marker was detected. |
| `planeGaze_<plane name>.tsv` | recording | `process.gaze_to_plane` | File with gaze data projected to the plane/surface. Only for eye tracker recordings. |
| `validate_<plane name>_*` | recording | `process.run_validation` | Series of files with output of the glassesValidator validation procedure. See the glassesValidator readme for descriptions. Only for eye tracker recordings. |
| `VOR_sync.tsv` | recording | `process.sync_et_to_cam` | File containing the synchronization offset (s) between eye tracker data and the scene camera. Only for eye tracker recordings. |
| `detectOutput.mp4` | recording | `process.make_video` | Video of the eye tracker scene camera or external camera (synchronized to one of the recordings if there are multiple) showing detected plane origins, detected individual markers and gaze from any other eye tracker recordings. For eye tracker recordings, gaze on the scene video from the eye tracker and gaze projected to the detected planes are also shown. Each is drawn only if available and enabled in the video generation settings. |
| `session.gazeMapper` | session | `Session.import_recording` | JSON file encoding the state of each session-level gazeMapper action. |
| `ref_sync.tsv` | session | `process.sync_to_ref` | File containing the synchronization offset (s) and other information about sync between multiple recordings. |
| `planeGaze_<recording name>.tsv` | session | `process.export_trials` | File containing the gaze position on one or multiple planes. One file is created per eye tracker recording. |
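When scripting around gazeMapper, it can be handy to check how far a recording has progressed by looking at which of the files listed above exist. The sketch below does this for the recording-level files with fixed names. It is a standalone helper, not part of gazeMapper: the name `missing_files` and the grouping in `EXPECTED_FILES` are choices made for this illustration, and files that are optional (`worldCamera.mp4`), specific to eye tracker recordings only at import (`gazeData.tsv`) or named after planes or markers are deliberately left out.

```python
from pathlib import Path

# Fixed-name recording-level files, grouped by the step that produces them
# (names and steps taken from the table above). Note that VOR_sync.tsv only
# exists for eye tracker recordings.
EXPECTED_FILES = {
    "Session.import_recording": [
        "calibration.xml",
        "frameTimestamps.tsv",
        "recording_info.json",
        "recording.gazeMapper",
    ],
    "process.code_episodes": ["coding.tsv"],
    "process.sync_et_to_cam": ["VOR_sync.tsv"],
    "process.make_video": ["detectOutput.mp4"],
}


def missing_files(recording_dir):
    """Return {step: [missing file names]} for steps lacking at least one file."""
    rec = Path(recording_dir)
    report = {}
    for step, names in EXPECTED_FILES.items():
        missing = [name for name in names if not (rec / name).is_file()]
        if missing:
            report[step] = missing
    return report
```

An empty dictionary means all listed files are present. A missing file does not necessarily indicate an error, since a step may simply not have been run yet or may not apply to the recording type.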

gazeMapper produces data in the reference frame of a plane/surface. This 2D data is stored in the `planeGaze_*` files produced when exporting the gazeMapper results, and also in the `planeGaze_*` files stored inside individual recordings' working folders.

The gaze data in these files has its origin (0,0) at a position that is specified in the plane setup (or in the case of a glassesValidator poster at the center of the fixation target that was indicated to be the center target with the `centerTarget` setting in the validation poster's `validationSetup.txt` configuration file). The positive x-axis points to the right and the positive y-axis downward, which means that (-,-) coordinates are to the left and above of the plane origin, and (+,+) to the right and below.
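The sign convention can be captured in a few lines. The sketch below is for illustration only: the function names `describe_plane_position` and `to_y_up` are hypothetical and not part of gazeMapper. The second function flips the y-axis, which may be convenient when plotting with tools whose y-axis points upward by default.

```python
def describe_plane_position(x, y):
    """Describe a plane coordinate relative to the plane origin.

    Convention from the text: +x points right, +y points down.
    """
    horizontal = "left" if x < 0 else "right" if x > 0 else "center"
    vertical = "above" if y < 0 else "below" if y > 0 else "center"
    return horizontal, vertical


def to_y_up(x, y):
    """Convert to a y-up convention by negating y."""
    return x, -y


print(describe_plane_position(-12.5, -3.0))  # a point left of and above the origin
print(to_y_up(40.0, 25.0))
```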

gazeMapper supports the following eye trackers:

- AdHawk MindLink

- Pupil Core

- Pupil Invisible

- Pupil Neon

- SeeTrue STONE

- SMI ETG 1 and ETG 2

- Tobii Pro Glasses 2

- Tobii Pro Glasses 3

Pull requests or partial help implementing support for further wearable eye trackers are gladly received. To support a new eye tracker, implement it in glassesTools.

For some eye trackers, the recording delivered by the eye tracker's recording unit or software can be directly imported into gazeMapper. Recordings from some other eye trackers however require some steps to be performed in the manufacturer's software before they can be imported into gazeMapper. These are:

- Pupil Labs eye trackers: Recordings should either be preprocessed using Pupil Player (Pupil Core and Pupil Invisible), Neon Player (Pupil Neon) or exported from Pupil Cloud (Pupil Invisible and Pupil Neon).

  - Using Pupil Player (Pupil Core and Pupil Invisible) or Neon Player (Pupil Neon): Each recording should 1) be opened in Pupil/Neon Player, and 2) an export of the recording (`e` hotkey) should be run from Pupil/Neon Player. Make sure to disable the `World Video Exporter` in the `Plugin Manager` before exporting, as the exported video is not used by glassesTools and takes a long time to create. If you want pupil diameters, make sure to enable `Export Pupil Positions` in the `Raw Data Exporter`, if available. Note that importing a Pupil/Neon Player export of a Pupil Invisible/Neon recording may require an internet connection. This is used to retrieve the scene camera calibration from Pupil Labs' servers in case the recording does not have a `calibration.bin` file.

  - Using Pupil Cloud (Pupil Invisible and Pupil Neon): Export the recordings using the `Timeseries data + Scene video` action.

  - For the Pupil Core, for best results you may wish to do a scene camera calibration yourself, see https://docs.pupil-labs.com/core/software/pupil-capture/#camera-intrinsics-estimation. If you do not do so, a generic calibration will be used by Pupil Capture during data recording, by Pupil Player during data analysis and by the glassesTools processing functions, which may result in incorrect accuracy values.

- SMI ETG: For SMI ETG recordings, access to BeGaze is required and the following steps should be performed:
  - NB: Note that it is critical that a `SYNC_ET_TO_CAM` action is performed for SMI ETG recordings. This is required because the gaze timestamps in the export file created below have an unknown zero that is not related to the scene video clock, and no info about offset between these clocks is available. The gaze signal and video feed have to be manually aligned (synchronized).
  - Export gaze data: `Export` -> `Legacy: Export Raw Data to File`.
    - In the `General` tab, make sure you select the following:
      - `Channel`: enable both eyes
      - `Points of Regard (POR)`: enable `Gaze position`, `Eye position`, `Gaze vector`
      - `Binocular`: enable `Gaze position`
      - `Misc Data`: enable `Frame counter`
      - disable everything else
    - In the `Details` tab, set:
      - `Decimal places` to 4
      - `Decimal separator` to `point`
      - `Separator` to `Tab`
      - enable `Single file output`
    - This will create a text file with a name like `<experiment name>_<participant name>_<number> Samples.txt` (e.g. `005-[5b82a133-6901-4e46-90bc-2a5e6f6c6ea9]_005_001 Samples.txt`). Move this file/these files to the recordings folder and rename them. If, for instance, the folder contains the files `005-2-recording.avi`, `005-2-recording.idf` and `005-2-recording.wav`, amongst others, for the recording you want to process, rename the exported samples text file to `005-2-recording.txt`.
  - Export the scene video:
    - On the Dashboard, double click the scene video of the recording you want to export to open it in the scanpath tool.
    - Right click on the video and select settings. Make the following settings in the `Cursor` tab:
      - set `Gaze cursor` to `translucent dot`
      - set `Line width` to 1
      - set `Size` to 1
    - Then export the video, `Export` -> `Export Scan Path Video`. In the options dialogue, make the following settings:
      - set `Video Size` to the maximum (e.g. `(1280,960)` in my case)
      - set `Frames per second` to the framerate of the scene camera (24 in my case)
      - set `Encoder` to `Performance [FFmpeg]`
      - set `Quality` to `High`
      - set `Playback speed` to `100%`
      - disable `Apply watermark`
      - enable `Export stimulus audio`
      - finally, click `Save as`, navigate to the folder containing the recording, and name it in the same format as the gaze data export file we created above but replacing `recording` with `export`, e.g. `005-2-export.avi`.
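The file naming convention for SMI ETG recordings described above can be sketched in a few lines of Python. This is only an illustration: `expected_smi_files` is a hypothetical helper, not part of gazeMapper or glassesTools, and it assumes the scene video of the recording is named `<prefix>recording.avi` as in the example.

```python
from pathlib import Path

def expected_smi_files(recording_avi):
    """Given e.g. '005-2-recording.avi', return the names the gaze export
    and the scan path video export should be given (hypothetical helper)."""
    p = Path(recording_avi)
    if not p.stem.endswith("recording"):
        raise ValueError(f"unexpected recording name: {p.name}")
    prefix = p.stem[: -len("recording")]          # e.g. '005-2-'
    gaze_file = p.with_suffix(".txt")             # 005-2-recording.txt
    video_file = p.with_name(prefix + "export.avi")  # 005-2-export.avi
    return gaze_file, video_file

gaze, video = expected_smi_files("005-2-recording.avi")
print(gaze.name, video.name)
```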

A gazeMapper session represents a single recording session. It may consist of only a single eye tracker recording, but could also contain multiple (simultaneous) eye tracker and external camera recordings. When setting up a gazeMapper project, one first defines what recordings to expect for a recording session. This is done in the Session definition pane in the GUI or by means of a gazeMapper.session.SessionDefinition object. A session definition contains a list of expected recordings, which are defined using the + new recording button on the Session definition pane in the GUI, or by means of gazeMapper.session.RecordingDefinition objects passed to gazeMapper.session.SessionDefinition.add_recording_def(). Each recording definition has a name and a recording type (gazeMapper.session.RecordingType), which is either an eye tracker recording (gazeMapper.session.RecordingType.Eye_Tracker) or an (external) camera recording (gazeMapper.session.RecordingType.Camera). The session definition is typically stored in a JSON file session_def.json in the configuration directory of a gazeMapper project.

As outlined above, each gazeMapper session is its own folder inside a gazeMapper project. The name of the session is simply the name of the folder (which we will term the session working folder). You can thus rename a session by renaming its working folder. Similarly, each recording's working folder is stored inside the session working folder, and its folder name is the name defined for the corresponding recording in the session definition. You are advised not to manually rename these folders, as folders whose name differs from that defined in the session definition are not recognized by gazeMapper.

When opening a gazeMapper project folder, each subfolder of the project folder containing a session.gazeMapper status file is taken to be a session, regardless of whether it has recording working folders or not. Similarly, recording working folders in a session working folder with names that match the recordings defined in the project's session definition will be loaded automatically.
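The folder-based discovery rule described above can be illustrated with a short sketch. It is not gazeMapper's own loading code: `find_sessions` is a hypothetical helper that only applies the two rules stated in the text (a subfolder is a session if it contains a `session.gazeMapper` file, and a recording working folder is recognized if its name matches a recording in the session definition).

```python
from pathlib import Path

STATUS_FILE = "session.gazeMapper"  # status file name as given in the text

def find_sessions(project_dir, recording_names):
    """Map each session folder name to the recording working folders found in it.

    recording_names: names of the recordings in the session definition.
    """
    sessions = {}
    for sub in sorted(Path(project_dir).iterdir()):
        if not sub.is_dir() or not (sub / STATUS_FILE).is_file():
            continue  # not a session working folder
        # a session may have no recording working folders at all
        sessions[sub.name] = [r for r in recording_names if (sub / r).is_dir()]
    return sessions
```

Folders inside a session whose names do not match a defined recording are simply ignored by this sketch, which mirrors the advice above not to rename recording working folders by hand.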

The main goal of gazeMapper is to map head-referenced gaze data recorded with a wearable eye tracker to one or multiple planes in the world. That means that gazeMapper determines where on the plane a participant looks, regardless of where in their visual field the plane appears, and that gazeMapper's output expresses gaze in the plane's reference frame. To be able to perform this mapping, gazeMapper needs to be able to determine where the participant is located in space and how they are oriented with respect to the plane (that is, their pose). This is done by placing an array of fiducial markers of known size and known spatial layout on the plane that can be detected through computer vision and used to determine the participant's pose. See the example above for what such an array of fiducial markers looks like.

For gazeMapper to be able to do its job, it needs to have precise information about the array of fiducial markers that defines the plane(s). When designing these arrays, it is important to use unique markers (in other words, each marker may only be used once across all planes and other markers that appear in the recording, e.g. for synchronization or automatic trial coding). Any dictionary of fiducial markers understood by OpenCV's ArUco module (cv2.aruco, see cv::aruco::PREDEFINED_DICTIONARY_NAME) is supported, i.e. various ArUco marker dictionaries as well as AprilTags. The default is DICT_4X4_250.
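The uniqueness requirement can be checked before printing any markers. The following is a minimal sketch under stated assumptions: `find_duplicate_marker_ids` is a hypothetical helper (not a gazeMapper function), and the plane names and IDs are made up for illustration.

```python
from collections import Counter

def find_duplicate_marker_ids(planes, individual_markers=()):
    """Return marker IDs used more than once across all planes and
    individually placed markers (hypothetical helper)."""
    counts = Counter(individual_markers)
    for ids in planes.values():
        counts.update(ids)
    return sorted(i for i, n in counts.items() if n > 1)

# hypothetical plane names and marker IDs
planes = {"table": [0, 1, 2, 3], "screen": [3, 4, 5]}
print(find_duplicate_marker_ids(planes, individual_markers=[5, 10]))  # prints [3, 5]
```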

Planes are configured in the Plane editor pane in the GUI or by means of gazeMapper.plane.Definition objects. There are two types of planes: a generic 2D plane (gazeMapper.plane.Type.Plane_2D) and a glassesValidator plane (gazeMapper.plane.Type.GlassesValidator). The configuration of a plane is stored in a subfolder of the project's configuration folder. The name of the plane is given by the name of this folder. For generic 2D planes, two configuration files are needed: a file providing information about which marker is positioned where and how each marker is oriented; and a settings file containing further information about both the markers and the plane. glassesValidator planes have their own settings and are discussed below. Here we describe the setup for generic 2D planes. Note that a PNG render of the defined plane is stored in the plane's configuration folder when running any gazeMapper processing action, or when pressing the generate reference image button in the GUI. This render can be used to check whether your plane definition is correct.

A generic 2D fiducial marker plane is defined by a file with four columns that describes the marker layout on the plane:

| Column | Description |
|---|---|
| ID | The marker ID. Must match a valid marker ID for the marker dictionary employed, and must be unique throughout the project. |
| x | The horizontal location of the marker's center on the plane (mm). |
| y | The vertical location of the marker's center on the plane (mm). |
| rotation_angle | The rotation of the marker, if any (degree). |

A file with this information should be stored under any name (e.g., markerPositions.csv) in the plane's configuration folder inside the project's configuration folder. See the example data for an example of such a file (TODO).
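As an illustration of the four-column layout, the sketch below parses a small marker file and rejects repeated IDs. It assumes the file is comma-separated with a header row naming the columns as in the table above; the marker positions are invented, and `read_marker_layout` is a hypothetical helper rather than gazeMapper's own reader.

```python
import csv
import io

# made-up example content for a file such as markerPositions.csv
EXAMPLE = """ID,x,y,rotation_angle
0,50,50,0
1,250,50,0
2,50,250,90
3,250,250,0
"""

def read_marker_layout(text):
    """Parse marker layout text into {ID: (x_mm, y_mm, rotation_deg)}."""
    markers = {}
    for row in csv.DictReader(io.StringIO(text)):
        marker_id = int(row["ID"])
        if marker_id in markers:
            raise ValueError(f"marker ID {marker_id} appears more than once")
        markers[marker_id] = (
            float(row["x"]),
            float(row["y"]),
            float(row["rotation_angle"]),
        )
    return markers

layout = read_marker_layout(EXAMPLE)
print(layout[2])  # prints (50.0, 250.0, 90.0)
```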

To be able to turn the information in the above file into a plane, further settings are needed:

| Setting | Description |
|---|---|
| marker_file | Name of the file specifying the marker layout on the plane (e.g., markerPositions.csv). |
| marker_size | Length of the edge of a marker (mm, excluding the white edge, only the black part). |
| marker_border_bits | Width of the black border around each marker. |
| plane_size | Total size of the plane (mm). Can be larger than the area spanned by the fiducial markers. |
| origin | The position of the origin of the plane (mm). |
| unit | Unit in which sizes and coordinates are expressed. Purely for informational purposes, not used in the software. Should be mm. |
| aruco_dict | The ArUco dictionary (see cv::aruco::PREDEFINED_DICTIONARY_NAME) of the markers. |
| ref_image_size | The size in pixels of the image that is generated of the plane with fiducial markers. |

These settings are typically stored in a file plane_def.json in the plane's configuration folder inside the project's configuration folder.
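To show how a marker's center position, rotation_angle and marker_size combine into a footprint on the plane, here is a minimal geometric sketch. It is not gazeMapper's implementation: `marker_corners` is a hypothetical helper, and both the rotation direction and the corner ordering are assumptions made for illustration.

```python
import math

def marker_corners(cx, cy, marker_size, rotation_deg=0.0):
    """Corner coordinates (mm) of a square marker centered on (cx, cy).

    marker_size is the edge length of the black part of the marker.
    Assumption: positive angles rotate from the +x axis towards the +y axis.
    """
    h = marker_size / 2
    a = math.radians(rotation_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    corners = []
    for dx, dy in [(-h, -h), (h, -h), (h, h), (-h, h)]:
        # rotate the corner offset about the marker center, then translate
        corners.append((cx + dx * cos_a - dy * sin_a,
                        cy + dx * sin_a + dy * cos_a))
    return corners

print(marker_corners(50, 50, 40))
```

A check like this can help confirm that all markers fall within plane_size before generating the reference image.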

gazeMapper has built-in support for computing data quality from the gaze data of a participant looking at a validation poster using glassesValidator. To use this functionality, a plane of type GlassesValidator (gazeMapper.plane.Type.GlassesValidator) needs to be defined in the project's setup. By default, the default glassesValidator plane is used for a GlassesValidator plane. When the default checkbox is unchecked in the GUI (the is_default setting in plane_def.json is False), a custom configuration can be used. When unchecking this checkbox in the GUI, files containing the plane setup are deployed to the plane configuration folder, so that the user can edit or replace them. API users are requested to call glassesValidator.config.deploy_validation_config() to deploy the glassesValidator configuration files to the plane's configuration folder. The customization options for a glassesValidator plane are documented here.

Besides planes, gazeMapper can also be configured to detect and report on the appearance of individual markers. This is configured in the Individual markers editor pane in the GUI or by means of gazeMapper.marker.Marker objects.

gazeMapper can perform the following processing actions on wearable eye tracking data. Some, like detecting the fiducial markers and projecting gaze data to the plane(s), are always available, while other actions are only available when certain settings are enabled. Unavailable actions are not shown in the GUI. Some actions depend on the output of other actions. Actions whose preconditions have not been met cannot be started from the GUI. Some actions are performed on a gazeMapper session (e.g., SYNC_TO_REFERENCE and MAKE_VIDEO) whereas others are run on one recording at a time (e.g., CODE_EPISODES and DETECT_MARKERS). The former will be referred to as session-level actions, the latter as recording-level actions. API use is not gated by such checks, but errors may be raised due to, for instance, missing input files. All available actions (gazeMapper.process.Action) are listed in the table below. More details about some of these processing actions are provided in the section below.

| Action | Availability | Level | Description |
|---|---|---|---|
| IMPORT | always | recording | Import a recording from a source directory to a recording working directory, which includes casting the eye tracker-specific data format into the glassesTools common format used by gazeMapper. |
| CODE_EPISODES | always | recording | Code analysis, synchronization and validation episodes in a recording. Shows a coding GUI. |
| DETECT_MARKERS | always | recording | Detect fiducial markers and determine the participant's pose for one or multiple planes and individual markers. |
| GAZE_TO_PLANE | always | recording | Map head-referenced gaze to one or multiple planes. |
| AUTO_CODE_SYNC | auto_code_sync_points option | recording | Automatically find sync points in the scene/external camera videos. Only makes sense to perform if there are multiple recordings in a session, since otherwise there is nothing to synchronize. |
| AUTO_CODE_TRIALS | auto_code_trial_episodes option | recording | Automatically find trial starts and ends using fiducial markers in the scene camera. |
| SYNC_ET_TO_CAM | get_cam_movement_for_et_sync_method option | recording | Synchronize gaze data to the scene camera. Shows a GUI for manually performing this synchronization. |
| SYNC_TO_REFERENCE | sync_ref_recording option | session | Synchronize the gaze data and cameras of multiple recordings. Only makes sense to perform if there are multiple recordings in a session, since otherwise there is nothing to synchronize. |
| RUN_VALIDATION | plane setup and episode coding setup | recording | Run glassesValidator to compute data quality from the gaze data of a participant looking at a validation poster. |
| EXPORT_TRIALS | always | session | Create a file for each recording containing the gaze position on one or multiple planes. |
| MAKE_VIDEO | video_make_which option | session | Make videos of the eye tracker scene camera or external camera (synchronized if there are multiple) showing gaze on the scene video from the eye tracker, gaze projected to the detected planes, detected plane origins, detected individual markers, and gaze from other eye tracker recordings (if available). |
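The distinction between session-level and recording-level actions in the table above can be summarized in a small lookup. This is a standalone illustration only: the `Level` enum and `ACTION_LEVELS` mapping below are hypothetical and simply copy the table, they are not the gazeMapper.process.Action API.

```python
from enum import Enum

class Level(Enum):
    RECORDING = "recording"
    SESSION = "session"

# levels copied from the table above; illustrative, not gazeMapper's API
ACTION_LEVELS = {
    "IMPORT": Level.RECORDING,
    "CODE_EPISODES": Level.RECORDING,
    "DETECT_MARKERS": Level.RECORDING,
    "GAZE_TO_PLANE": Level.RECORDING,
    "AUTO_CODE_SYNC": Level.RECORDING,
    "AUTO_CODE_TRIALS": Level.RECORDING,
    "SYNC_ET_TO_CAM": Level.RECORDING,
    "SYNC_TO_REFERENCE": Level.SESSION,
    "RUN_VALIDATION": Level.RECORDING,
    "EXPORT_TRIALS": Level.SESSION,
    "MAKE_VIDEO": Level.SESSION,
}

def actions_at(level):
    """List the action names that run at the given level, in table order."""
    return [name for name, lvl in ACTION_LEVELS.items() if lvl is level]

print(actions_at(Level.SESSION))  # prints ['SYNC_TO_REFERENCE', 'EXPORT_TRIALS', 'MAKE_VIDEO']
```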

In the GUI, an overview of which processing actions are enqueued or running for a session or recording is shown in the Sessions pane, in the detail view for a specific session, and in the Processing queue pane.

To process a recording, gazeMapper needs to be told where in the recording several events are, such as the trial(s) for which you want to have gaze data mapped to the plane. There are four types of episodes (glassesTools.annotation.Event) that can be coded. Which of these are available depends on the set of events that are set to be coded for the project with the episodes_to_code setting. Some may not be of use, depending on the configuration of the gazeMapper project:

| Episode | Availability | Description |
|---|---|---|
| Trial | always | Denotes an episode for which to map gaze to plane(s). This determines for which segments there will be gaze data when running the gazeMapper.process.Action.EXPORT_TRIALS action. |
| Validate | plane setup | Denotes an episode during which a participant looked at a validation poster, to be used to run glassesValidator to compute data quality of the gaze data. |
| Sync_Camera | sync_ref_recording option | Time point (frame from video) when a synchronization event happened, used for synchronizing different recordings. |
| Sync_ET_Data | get_cam_movement_for_et_sync_method option | Episode to be used for synchronization of eye tracker data to scene camera (e.g. using VOR). |

Furthermore, the Trial, Validate and Sync_Camera episodes need to be associated with one or multiple planes to be detected during these episodes. Which planes to detect per episode is set up with the planes_per_episode setting.
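The rule that some episode types need associated planes can be expressed as a simple consistency check. The sketch below is hypothetical: it represents episodes_to_code as a list of episode names and planes_per_episode as a dict from episode name to a list of plane names, which is an assumption about the data shape and not gazeMapper's actual settings format.

```python
# episode types that, per the text, must be associated with one or more planes
EPISODES_NEEDING_PLANES = {"Trial", "Validate", "Sync_Camera"}

def episodes_missing_planes(episodes_to_code, planes_per_episode):
    """Return the coded episode types that need planes but have none set up."""
    return sorted(
        e for e in episodes_to_code
        if e in EPISODES_NEEDING_PLANES and not planes_per_episode.get(e)
    )

# hypothetical project configuration
to_code = ["Trial", "Validate", "Sync_ET_Data"]
per_episode = {"Trial": ["table"], "Validate": []}
print(episodes_missing_planes(to_code, per_episode))  # prints ['Validate']
```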