function g = sigmoid(z)

g = 1 ./ (1 + exp(-z));

end

J = mean((-y).* log(sigmoid(X*theta))- (1-y).* log(1 - sigmoid(X*theta)));

grad = 1/m * X' * (sigmoid(X*theta) - y);
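For reference, the two lines above appear to be vectorized forms of the standard (unregularized) logistic regression cost and gradient. The notation below is an assumption chosen to match the code: $m$ is the number of training examples, $g$ is the sigmoid function, and $h = g(X\theta)$ is the vector of predicted probabilities.

$$
J(\theta) = \frac{1}{m}\sum_{i=1}^{m}\left[-y^{(i)}\log h^{(i)} - \left(1 - y^{(i)}\right)\log\left(1 - h^{(i)}\right)\right],
\qquad h = g(X\theta),\quad g(z) = \frac{1}{1 + e^{-z}}
$$

$$
\nabla_\theta J(\theta) = \frac{1}{m}\,X^{T}\left(h - y\right)
$$

The `mean(...)` in the code supplies the $\frac{1}{m}\sum$ factor, and `X' * (sigmoid(X*theta) - y)` is the matrix product $X^{T}(h - y)$.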

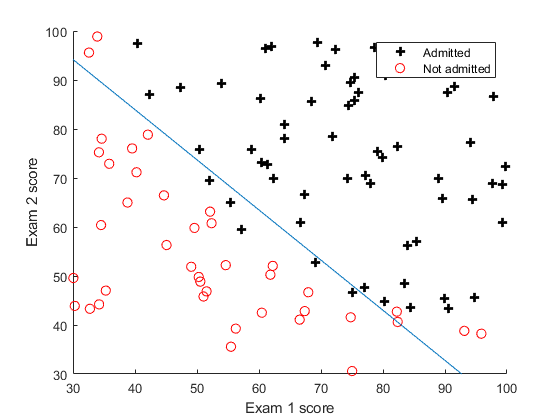

MATLAB's fminunc is an optimization solver that finds the minimum of an unconstrained function. fminunc takes as input:

- The initial value of the parameters we are trying to optimize

- A function that computes the cost and its gradient

% Set options for fminunc

options = optimset('GradObj', 'on', 'MaxIter', 400);

% Run fminunc to obtain the optimal theta

% This function will return theta and the cost

[theta, cost] = fminunc(@(t)(costFunction(t, X, y)), initial_theta, options);

'GradObj', 'on': tells fminunc that our function returns both the cost and the gradient, so fminunc can use the gradient when minimizing the function.

'MaxIter', 400: tells fminunc to run for at most 400 iterations before it terminates.

@(t) ( costFunction(t, X, y) ): specifies the function we are actually minimizing. It creates an anonymous function of a single argument t that calls your costFunction with X and y fixed.
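To make the interface concrete, here is a minimal sketch of what a `costFunction` compatible with the fminunc call could look like. It wraps the cost and gradient expressions from earlier in a function with two outputs. The file layout (a single function saved as costFunction.m) and the inlined sigmoid are assumptions for illustration, not necessarily how the original exercise code is organized.

```matlab
function [J, grad] = costFunction(theta, X, y)
% Sketch of a cost function in the form fminunc expects when 'GradObj' is 'on':
% the first output is the scalar cost, the second is the gradient vector.
m = length(y);                        % number of training examples
h = 1 ./ (1 + exp(-(X * theta)));     % sigmoid of X*theta, inlined (m x 1)
J = mean(-y .* log(h) - (1 - y) .* log(1 - h));
grad = (1 / m) * X' * (h - y);        % same size as theta
end
```

With `theta` set to all zeros, every prediction is 0.5, so the cost should equal `log(2)` regardless of the data, which makes a handy sanity check before handing the function to fminunc.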

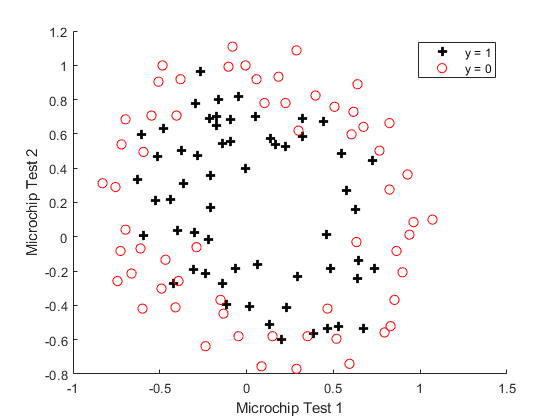

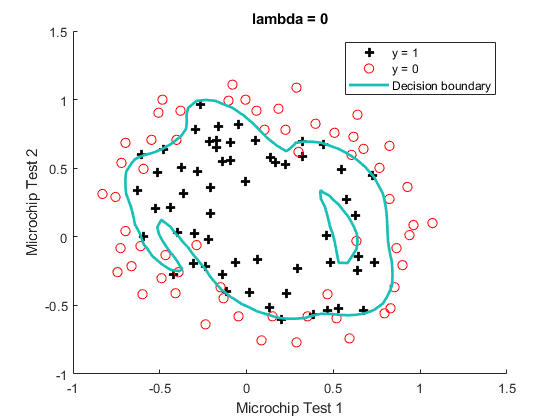

% Inputs X1, X2 must be the same size

% Returns a new feature array with more features, comprising

% X1, X2, X1.^2, X1.*X2, X2.^2, X1.^3, etc.

degree = 6;

out = ones(size(X1(:,1)));

for i = 1:degree

for j = 0:i

out(:, end+1) = (X1.^(i-j)).*(X2.^j);

end

end
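A small worked example may help show the column order this loop produces. The sketch below uses a hypothetical degree of 2 and two made-up samples (rather than the degree of 6 used above) so that the output stays readable.

```matlab
% Two made-up samples with two features each (illustrative values only)
X1 = [1; 2];
X2 = [3; 4];
degree = 2;                      % smaller than the 6 used above

out = ones(size(X1(:,1)));       % bias column of ones
for i = 1:degree
    for j = 0:i
        % append the term X1^(i-j) * X2^j as a new column
        out(:, end+1) = (X1.^(i-j)) .* (X2.^j);
    end
end

disp(out)
% columns: 1, X1, X2, X1.^2, X1.*X2, X2.^2
%      1     1     3     1     3     9
%      1     2     4     4     8    16
```

In general, a degree of d yields (d+1)(d+2)/2 columns including the bias column, so the degree of 6 used above expands two features into 28 columns.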

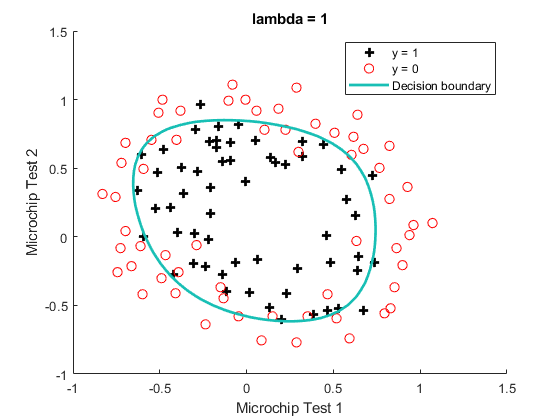

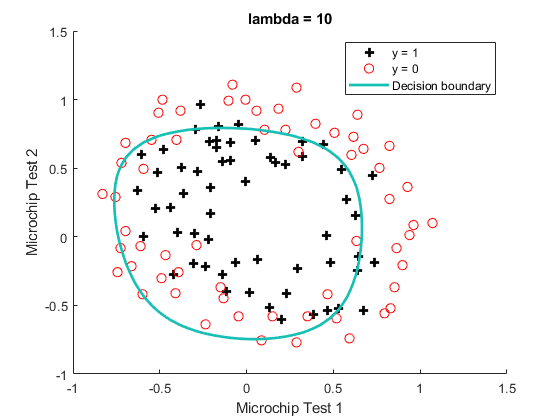

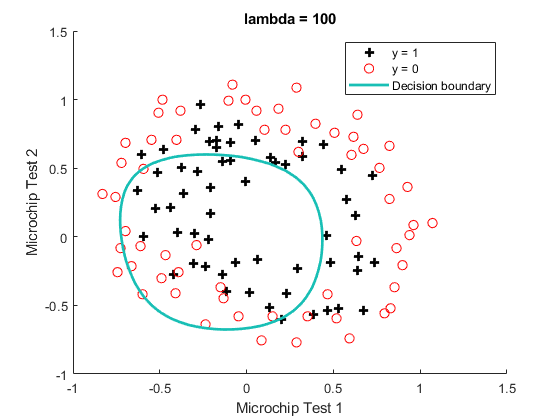

theta0 = [0; theta(2:end)];

J = mean((-y).* log(sigmoid(X*theta)) - (1-y).* log(1 - sigmoid(X*theta))) + lambda/(2*m)*sum(theta0.*theta0);

grad = 1/m * X' * (sigmoid(X*theta) - y) + lambda/m*theta0;
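Written out, the regularized code above seems to correspond to the following expressions. The notation is an assumption chosen to match the code: $h = g(X\theta)$ with $g$ the sigmoid, $\theta_0$ is the intercept (stored as `theta(1)` in MATLAB), and $\tilde{\theta}$ is $\theta$ with the intercept replaced by zero (the vector called `theta0` in the code).

$$
J(\theta) = \frac{1}{m}\sum_{i=1}^{m}\left[-y^{(i)}\log h^{(i)} - \left(1 - y^{(i)}\right)\log\left(1 - h^{(i)}\right)\right] + \frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^{2}
$$

$$
\nabla_\theta J(\theta) = \frac{1}{m}\,X^{T}\left(h - y\right) + \frac{\lambda}{m}\,\tilde{\theta},
\qquad \tilde{\theta} = \left(0, \theta_1, \ldots, \theta_n\right)^{T}
$$

Because the penalty sum starts at $j = 1$, the intercept is not regularized, which is why the code zeroes the first element before computing both the penalty and its gradient.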

% Set Options

options = optimset('GradObj', 'on', 'MaxIter', 400);

% Optimize

[theta, J, exit_flag] = fminunc(@(t)(costFunctionReg(t, X, y, lambda)), initial_theta, options);